It usually starts with a Monday morning Slack message. Traffic is down 40%. Nobody changed anything over the weekend — or so everyone thinks. Then you pull Google Search Console and find several hundred pages listed as “Excluded by noindex tag.” Nobody applied a noindex directive. Except someone did, during a Friday deployment, when a staging environment configuration made it into production and nobody had a post-deploy verification step in place. The pages were live. The content was there. But to Google, the site had essentially gone dark.

This scenario plays out constantly, across sites of every size. The frustrating part is not that technical SEO implementation mistakes happen — they are inevitable in any development workflow. The frustrating part is how long they go undetected, and how poorly most teams are equipped to diagnose them once they surface. Most published guides on technical SEO implementation mistakes treat the subject as a flat list: here are twenty things that can go wrong, here is how to fix each one. That approach collapses the entire diagnostic problem into a lookup exercise, and it fails badly when multiple issues exist simultaneously, when the root cause is buried two layers beneath the visible symptom, or when the mistake was introduced by a deployment event rather than a configuration error.

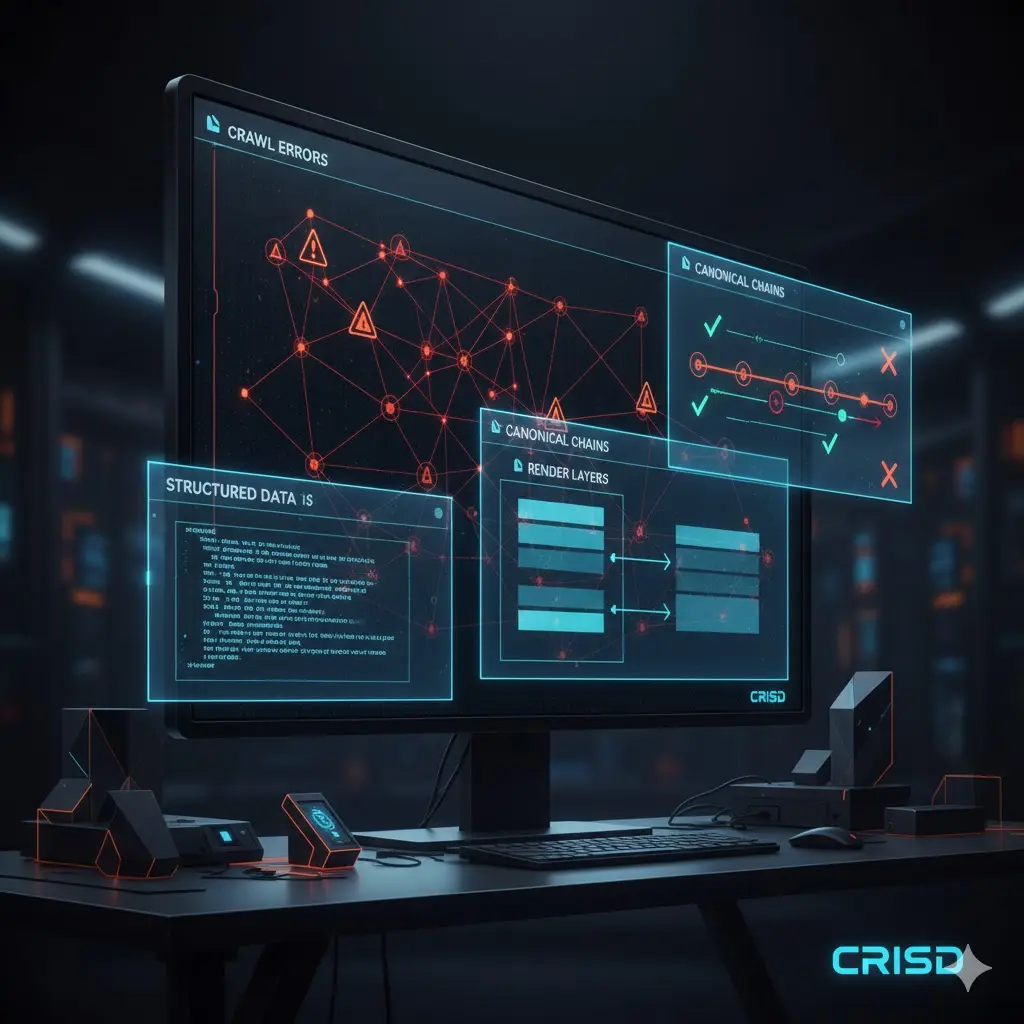

This guide takes a different approach. It introduces the CRISD Framework — a sequenced, five-layer diagnostic system for identifying, classifying, and resolving technical SEO implementation mistakes by root cause, not by surface signal. Every mistake covered here is tagged with its CRISD layer, its severity tier, and a recovery timeline block so you know what to fix first, how to confirm it worked, and how long to wait before escalating.

The CRISD Framework — Diagnosing Technical SEO Mistakes in the Right Sequence

The single most common mistake in technical SEO debugging is treating a downstream symptom as an upstream root cause. Rankings drop, and the immediate response is to audit content quality. Impressions fall, and the team starts examining metadata. Both may eventually be relevant, but neither addresses what is often the actual problem: a failure at a lower layer in the technical stack that is suppressing every fix applied above it.

The CRISD Framework imposes diagnostic sequencing. Each layer is a prerequisite for the one that follows it. Resolving issues at a higher layer without verifying the layer beneath it produces fixes that either do nothing or mask deeper problems temporarily.

The Five CRISD Layers

- C — Crawl: Can Googlebot discover and access the page? If crawl is blocked, nothing else in the framework matters.

- R — Render: Can Googlebot execute the page correctly and see the content as intended? JavaScript failures live here.

- I — Index: Is the page eligible for indexation, and is the correct version being indexed? Canonical conflicts, noindex tags, and hreflang errors all belong to this layer.

- S — Signal: Are ranking signals being correctly attributed to the right URL? Internal link equity, structured data integrity, and redirect chain health are Signal-layer concerns.

- D — Deploy: Are implementation mistakes being introduced at the deployment stage, resetting correctly configured settings with each release cycle? This is the most overlooked layer in all of technical SEO.

The framework is designed to be worked left to right. If a Crawl-layer issue exists, confirm it is fully resolved before investigating Render. If Render is clean, move to Index. This sequence eliminates the wasted effort of optimising signal attribution on pages that Googlebot cannot reach in the first place.

CRISD Layer Quick-Reference Table

| CRISD Layer | Primary Failure Type | P-Tier Range | Primary Detection Tool | GSC Report Anchor |
|---|---|---|---|---|
| C – Crawl | robots.txt blocks, crawl budget waste, orphaned pages, 5xx errors | P0–P2 | Screaming Frog, GSC Coverage | Coverage → Excluded → Blocked by robots.txt |
| R – Render | JS rendering failures, hydration errors, INP regressions | P1–P2 | GSC URL Inspection, Chrome DevTools | Core Web Vitals, URL Inspection → Rendered HTML |
| I – Index | Canonical conflicts, noindex misapplication, hreflang errors | P0–P2 | GSC Coverage, Screaming Frog | Coverage → Excluded → Duplicate without user-selected canonical |
| S – Signal | Redirect chains, schema conflicts, internal link dilution | P2–P3 | Ahrefs Site Audit, Rich Results Test | GSC Enhancements → Rich Results errors |
| D – Deploy | Staging directives pushed live, robots.txt overwrite, CDN stale serve | P0–P1 | GSC URL Inspection, curl headers | Coverage → sudden noindex spike post-deploy |

P-Tier Severity Classification — Triaging Technical SEO Mistakes Before You Fix Anything

Not every technical SEO implementation mistake warrants emergency response. Treating a missing alt attribute with the same urgency as a sitewide noindex directive is how teams burn sprint capacity on low-impact work while genuine P0 issues accumulate. Before any fixing begins, every identified mistake needs a severity tier assigned to it.

The Four Severity Tiers

| Tier | Label | Definition | Scope | Discovery Lag | Response SLA |
|---|---|---|---|---|---|
| P0 | Site-Breaking | Prevents crawling or indexing across the entire site or major sections | Site-wide or large section | Minutes to hours | Immediate: fix before anything else |
| P1 | Indexation Impact | Pages crawled but not indexed, or wrong page version indexed | URL group or section | Days to weeks | Within 48 hours of discovery |
| P2 | Ranking Signal Degradation | Pages indexed but signals diluted, split, or misattributed | Page or cluster level | Weeks to months | Within current sprint |
| P3 | Signal Noise / UX | Suboptimal signals creating inefficiency, not active suppression | Individual pages | Often silent | Backlog and monitor |

One note on tier escalation: P-tier ratings are not static. The same mistake that rates P2 on a 5,000-page site can behave like a P0 on a site with 500,000 URLs. When a template-level error applies an incorrect directive across an entire page class, the scope multiplier elevates the severity regardless of the mistake’s intrinsic nature. Always factor URL scope into tier assignment, not just mistake type.
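
To make scope-based escalation repeatable across a team, the rule can be written down as a small function. The sketch below is a minimal illustration only: the share thresholds (10% and 50% of URLs) and the absolute counts (10,000 and 100,000 URLs) are hypothetical values chosen for the example, not figures from any standard, and should be tuned to your own site.

```python
from enum import IntEnum


class Tier(IntEnum):
    """Severity tiers; a lower value means more urgent."""
    P0 = 0
    P1 = 1
    P2 = 2
    P3 = 3


def effective_tier(base: Tier, affected_urls: int, total_urls: int) -> Tier:
    """Escalate a mistake's base tier according to how many URLs it touches.

    The thresholds below are hypothetical and only illustrate the idea
    of a scope multiplier.
    """
    share = affected_urls / total_urls if total_urls else 0.0
    if share >= 0.5 or affected_urls >= 100_000:
        steps = 2  # template-level error across most of the site
    elif share >= 0.1 or affected_urls >= 10_000:
        steps = 1  # a whole section or page class is affected
    else:
        steps = 0  # isolated pages: keep the intrinsic tier
    return Tier(max(Tier.P0, base - steps))


if __name__ == "__main__":
    # The same P2 mistake, applied by a template on two very different sites.
    print(effective_tier(Tier.P2, affected_urls=300, total_urls=5_000).name)
    print(effective_tier(Tier.P2, affected_urls=400_000, total_urls=500_000).name)
```

Encoding the rule this way means two people triaging the same issue reach the same tier, and the thresholds can be debated once rather than per incident.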

CRISD Layer 1 — Crawl: Technical SEO Implementation Mistakes That Block Discovery

Crawl is the foundation layer. Every subsequent diagnostic is irrelevant if Googlebot cannot reliably access the pages in question. Crawl-layer mistakes are disproportionately dangerous because they frequently produce no visible error in the CMS or in routine uptime monitoring. The site appears functional to any human user. The problem often surfaces only in GSC data or raw server logs, and sometimes only after weeks of suppressed crawl activity.

If you are working through a site audit and are unsure where to begin, a comprehensive SEO audit tool can surface crawl-layer issues at scale before you invest time in signal-level diagnosis.

Mistake C1 — robots.txt Disallow Rules That Silently Block Critical Paths [P0]

A robots.txt misconfiguration is one of the fastest ways to suppress an entire site section without any deployment error, CMS alert, or server warning of any kind. The file is plain text, edited manually, and almost never subject to the same review process as template code or redirect configuration. That combination makes it uniquely hazardous.

The most damaging pattern is an overly broad Disallow rule written to block a specific subdirectory that inadvertently matches additional paths. Because robots.txt rules match URL path prefixes, a rule like Disallow: /en, intended to block a staging locale, will also block /enterprise, /engineering, and any other path that begins with /en. Writing the rule as Disallow: /en/ limits it to the locale directory. The site owner sees the intended block working. They do not see the unintended casualties.
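
Python’s standard-library urllib.robotparser applies the same prefix matching, which makes it a quick way to see how far a Disallow rule reaches. The sketch below uses a hypothetical host and paths. It is not a full emulation of Googlebot: robotparser does not support Google’s * and $ wildcards, and within a group it applies the first matching rule rather than Google’s longest-match rule, so treat the output as a sanity check rather than a definitive verdict.

```python
from urllib.robotparser import RobotFileParser


def blocked_paths(robots_txt: str, paths: list[str], agent: str = "Googlebot") -> list[str]:
    """Return the paths that robots_txt disallows for the given user agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    base = "https://www.example.com"  # hypothetical host
    return [p for p in paths if not parser.can_fetch(agent, base + p)]


PATHS = ["/en/home", "/enterprise/pricing", "/engineering/blog", "/fr/accueil"]

# Intended to block only the /en staging locale...
too_broad = "User-agent: *\nDisallow: /en\n"
# ...whereas a trailing slash scopes the rule to the directory.
scoped = "User-agent: *\nDisallow: /en/\n"

print(blocked_paths(too_broad, PATHS))
print(blocked_paths(scoped, PATHS))
```

The first call reports /enterprise and /engineering alongside the intended locale; the second reports only /en/home.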

Secondary failure mode: a Disallow: / entry left in the file after a development environment goes live. This is not hypothetical. It is one of the most frequently documented causes of sudden sitewide deindexation, and it is entirely preventable with a post-deploy verification step.
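
A post-deploy verification step can be as small as parsing the live robots.txt and confirming that a short list of must-crawl URLs is still allowed. The sketch below shows one way to do that in a CI job; the example.com URLs in CRITICAL_URLS are placeholders, fetch_robots_txt assumes the file is served at the standard /robots.txt location, and the robotparser caveats about wildcards and rule precedence still apply.

```python
import urllib.request
from urllib.robotparser import RobotFileParser

# Placeholder list: replace with the URLs that must never be blocked.
CRITICAL_URLS = [
    "https://www.example.com/",
    "https://www.example.com/products/",
    "https://www.example.com/blog/",
]


def fetch_robots_txt(origin: str) -> str:
    """Download robots.txt from the site root, e.g. https://www.example.com."""
    with urllib.request.urlopen(f"{origin}/robots.txt", timeout=10) as resp:
        return resp.read().decode("utf-8", errors="replace")


def blocked_critical_urls(robots_txt: str, urls: list[str], agent: str = "Googlebot") -> list[str]:
    """Return every critical URL that robots_txt blocks for the given agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [u for u in urls if not parser.can_fetch(agent, u)]


if __name__ == "__main__":
    # Offline demo: a staging file that slipped into production.
    staging_file = "User-agent: *\nDisallow: /\n"
    for url in blocked_critical_urls(staging_file, CRITICAL_URLS):
        print("BLOCKED after deploy:", url)
    # In CI, pass fetch_robots_txt("https://www.example.com") instead and
    # fail the build if the returned list is not empty.
```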

A third pattern worth watching is conflicting directives across multiple User-agent groups. Googlebot obeys only the single most specific group that matches its user agent and ignores every other group. If a file contains both a User-agent: * group and a User-agent: Googlebot group, none of the wildcard rules apply to Googlebot, including rules for paths the Googlebot group never mentions. Teams that assume the two groups are merged end up with crawl states that differ between Googlebot and other crawlers, producing GSC data that does not match log file observations.
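
The group-selection behaviour is easy to demonstrate with urllib.robotparser, which, like Googlebot, uses a named group in preference to the wildcard group when one matches. In the hypothetical file below, /private/ is disallowed only in the wildcard group, so Googlebot may crawl it while other bots may not. One caveat: robotparser picks the first matching named group in file order rather than the most specific one, which only matters when several named groups could match.

```python
from urllib.robotparser import RobotFileParser

ROBOTS_TXT = """\
User-agent: *
Disallow: /private/

User-agent: Googlebot
Disallow: /tmp/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())


def allowed(agent: str, path: str) -> bool:
    """True if the agent may fetch the path on a hypothetical host."""
    return parser.can_fetch(agent, "https://www.example.com" + path)


for agent in ("Googlebot", "Bingbot"):
    for path in ("/private/report", "/tmp/cache"):
        verdict = "allowed" if allowed(agent, path) else "blocked"
        print(f"{agent:10} {path:16} {verdict}")
```

If /private/ must be blocked for Googlebot as well, the Disallow line has to be repeated inside the Googlebot group.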

Mistake C2 — Crawl Budget Misallocation on Large Sites [P1]

Crawl budget is a finite resource. Google describes it as the combination of two factors: crawl capacity (how much crawling your server can absorb, based on response times and errors) and crawl demand (how much Google wants to crawl, based on URL popularity and staleness). On large sites, budget misallocation (spending crawl capacity on low-value or duplicate pages) directly reduces the crawl frequency of high-value content.

The most common sources of crawl budget waste are URL parameter proliferation (session IDs, tracking parameters, filter combinations generating unique URLs for identical content), infinite scroll or faceted navigation generating uncontrolled URL variants, and internal search result pages being accessible to crawlers. Each of these categories can generate thousands of low-value URLs that consume crawl allocation without contributing any indexation return.
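
A quick way to size parameter proliferation is to normalise a list of crawled URLs (from a crawler export or server logs) and count how many raw URLs collapse onto each normalised form. The sketch below assumes a hypothetical IGNORED_PARAMS set of parameters that never change page content on the site, and it sorts the remaining parameters so that filter combinations written in different orders count as one URL.

```python
from collections import defaultdict
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical: parameters known not to change the content of a page.
IGNORED_PARAMS = {"utm_source", "utm_medium", "utm_campaign", "gclid", "sessionid"}


def normalise(url: str) -> str:
    """Drop content-neutral parameters, sort the rest, and strip fragments."""
    parts = urlsplit(url)
    kept = sorted(
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in IGNORED_PARAMS
    )
    return urlunsplit((parts.scheme, parts.netloc.lower(), parts.path, urlencode(kept), ""))


def duplicate_groups(urls: list[str]) -> dict[str, set[str]]:
    """Map each normalised URL to the raw variants that collapse onto it."""
    groups = defaultdict(set)
    for url in urls:
        groups[normalise(url)].add(url)
    return {key: variants for key, variants in groups.items() if len(variants) > 1}


crawled = [
    "https://www.example.com/shoes?color=red&size=9",
    "https://www.example.com/shoes?size=9&color=red",
    "https://www.example.com/shoes?color=red&size=9&utm_source=newsletter",
    "https://www.example.com/shoes?sessionid=abc123&color=red&size=9",
    "https://www.example.com/boots",
]
for canonical, variants in duplicate_groups(crawled).items():
    print(f"{len(variants)} crawlable variants -> {canonical}")
```

A high ratio of raw to normalised URLs on a template is a strong hint that parameter handling, not content volume, is consuming crawl capacity.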

The diagnostic signal for budget misallocation is a crawl frequency plateau on important pages. If GSC shows key templates being recrawled infrequently while the Coverage report shows a growing count of “Discovered – currently not indexed” URLs (pages Google has found but postponed crawling), budget is likely being absorbed by URL proliferation elsewhere on the site.

Mistake C3 — Orphaned Pages With Zero Internal Link Equity [P1]

Orphaned pages — those with no inbound internal links — receive crawl discovery only through XML sitemaps or direct URL submission. They do not receive equity signals from the rest of the site architecture. In practice, this means they are crawled less frequently, indexed with weaker signals, and excluded from the natural crawl chain that ties site authority together.

The pattern is most common after site migrations, when legacy URLs are 301-redirected to new paths but the internal links pointing to the old URLs are never updated. The new URLs are then reachable only through redirects: they have no direct internal links of their own, and every crawl of an old link costs Googlebot an extra hop. Updating internal links to point directly at the canonical destination improves crawl efficiency and removes unnecessary redirect overhead from every crawl cycle.
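
Before updating internal links after a migration, it helps to resolve every redirecting link target to its final destination so links can point there directly. The sketch below assumes the redirect rules are available as a simple source-to-target mapping (for example, exported from server or CDN configuration); the paths are hypothetical. It also flags loops and overly long chains instead of following them forever.

```python
def resolve_redirects(url: str, redirects: dict[str, str], max_hops: int = 10) -> tuple[str, int]:
    """Follow a redirect map from url to its final destination.

    Returns (final_url, hop_count). Raises ValueError on loops or on
    chains longer than max_hops.
    """
    seen = {url}
    current, hops = url, 0
    while current in redirects:
        current = redirects[current]
        hops += 1
        if current in seen:
            raise ValueError(f"redirect loop involving {current}")
        if hops > max_hops:
            raise ValueError(f"chain from {url} exceeds {max_hops} hops")
        seen.add(current)
    return current, hops


def links_to_update(internal_links: list[tuple[str, str]], redirects: dict[str, str]) -> list[tuple[str, str, str, int]]:
    """List (source_page, old_target, final_target, hops) for every redirecting link."""
    updates = []
    for source, target in internal_links:
        final, hops = resolve_redirects(target, redirects)
        if hops:
            updates.append((source, target, final, hops))
    return updates


REDIRECTS = {"/old-guide": "/guides/seo", "/guides/seo": "/guides/technical-seo"}
LINKS = [("/blog/post-1", "/old-guide"), ("/blog/post-2", "/guides/technical-seo")]

for source, old, new, hops in links_to_update(LINKS, REDIRECTS):
    print(f"{source}: change {old} -> {new} (saves {hops} hop(s))")
```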

Mistake C4 — Server Response Errors Creating Crawl Dead Zones (5xx Patterns) [P0]

Sustained 5xx responses reduce the rate at which Google crawls the affected site. This is documented behaviour: Google treats server errors (and 429 responses) as a signal to slow down, so a widespread error pattern does not only suppress the failing URLs. It throttles crawl activity across the whole host until responses are consistently healthy again, and URLs that keep returning 5xx are eventually dropped from the index.

Intermittent 500 errors during high-traffic periods are particularly dangerous because they rarely reproduce on demand. A URL that returns 200 during manual testing but 500 when Googlebot happens to request it will silently degrade crawl frequency without leaving obvious evidence in standard uptime monitoring.

The diagnostic approach requires log file analysis, not just GSC inspection. GSC’s Crawl Stats report aggregates crawl activity into daily totals, which smooths out intermittent 5xx patterns. Log files record every request with its timestamp and status code, allowing precise correlation between server load periods and Googlebot response codes.
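
As a starting point for that log analysis, the sketch below parses access logs in the common Apache/Nginx combined format, keeps requests whose user agent contains “Googlebot”, and counts 5xx responses per hour. Two assumptions to flag: your log format may differ, in which case LOG_PATTERN needs adjusting, and user-agent strings can be spoofed, so production analysis should verify Googlebot through a reverse DNS lookup before trusting the counts. The sample lines are fabricated for illustration.

```python
import re
from collections import Counter
from datetime import datetime

# Combined log format: ip ident user [time] "request" status bytes "referer" "agent"
LOG_PATTERN = re.compile(
    r'^(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+)[^"]*" (?P<status>\d{3}) \S+ '
    r'"[^"]*" "(?P<agent>[^"]*)"'
)


def googlebot_errors_by_hour(lines: list[str]) -> dict[str, tuple[int, int]]:
    """Return {hour: (googlebot_requests, googlebot_5xx)} for the given log lines."""
    requests, errors = Counter(), Counter()
    for line in lines:
        match = LOG_PATTERN.match(line)
        if not match or "Googlebot" not in match["agent"]:
            continue
        stamp = datetime.strptime(match["time"], "%d/%b/%Y:%H:%M:%S %z")
        hour = stamp.strftime("%Y-%m-%d %H:00")
        requests[hour] += 1
        if match["status"].startswith("5"):
            errors[hour] += 1
    return {hour: (requests[hour], errors[hour]) for hour in sorted(requests)}


GBOT = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
SAMPLE = [
    f'66.249.66.1 - - [12/Mar/2024:14:05:32 +0000] "GET /products/a HTTP/1.1" 200 5120 "-" "{GBOT}"',
    f'66.249.66.1 - - [12/Mar/2024:14:40:10 +0000] "GET /products/b HTTP/1.1" 503 0 "-" "{GBOT}"',
    '203.0.113.9 - - [12/Mar/2024:14:41:00 +0000] "GET /products/b HTTP/1.1" 200 4096 "-" "Mozilla/5.0"',
    f'66.249.66.1 - - [12/Mar/2024:15:02:11 +0000] "GET /products/c HTTP/1.1" 200 2048 "-" "{GBOT}"',
]

for hour, (total, failed) in googlebot_errors_by_hour(SAMPLE).items():
    print(f"{hour}  googlebot_requests={total}  5xx={failed}")
```

Lining these hourly counts up against server load or traffic graphs is what exposes errors that only occur under peak conditions.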

Mistake C5 — Incorrect Crawl Directives on Paginated Sequences [P2]

After Google deprecated rel="next" and rel="prev" pagination signals, many sites were left without any coherent crawl strategy for paginated content. The replacement approaches — self-referencing canonicals on page 2+, noindexing pagination beyond page 1, or simply leaving pagination without directives — each carry implementation risks that are frequently handled incorrectly.

Noindexing paginated pages beyond the first is a legitimate strategy, but it must be paired with sufficient internal linking to the individual items within those pages. If pages 2 through N are noindexed and the products or articles on those pages have no other inbound link path, those items become effectively orphaned from a crawl perspective. The risk grows over time: Google has said that pages left noindexed long-term are eventually crawled less, and their links may stop being followed. The content exists. Googlebot will not reliably find it.

Using self-referencing canonicals on pagination (each page canonicalising to itself rather than to page 1) is the safest approach from an indexation standpoint, but it provides no crawl guidance. It requires that each paginated page be independently discoverable through internal linking and sitemap inclusion.

Crawl Layer Recovery Timeline

After implementing fixes for Crawl-layer technical SEO implementation mistakes, recovery does not happen immediately. Understanding realistic timelines prevents premature re-auditing and helps establish when escalation is warranted.

| Mistake | Expected Recrawl Window | Expected Index Change | GSC Confirmation Signal | Escalation Trigger |
|---|---|---|---|---|
| C1 – robots.txt block corrected | 24–72 hours for Googlebot to fetch updated file | 7–21 days for newly unblocked pages to appear in index | Coverage “Blocked by robots.txt” count drops to expected level | Count not declining after 14 days post-fix |
| C2 – Crawl budget waste eliminated | 2–4 weeks for crawl frequency to rebalance | 4–8 weeks for improved crawl frequency on target pages to reflect in coverage | Crawl Stats report and server logs show increased crawl on target templates | Crawl distribution unchanged after 30 days |
| C3 – Orphaned pages linked internally | Next crawl cycle following internal link addition (days to 1 week) | 2–4 weeks for newly discovered pages to enter index | “Discovered – currently not indexed” count declining | Pages still not indexed 30 days after internal links added |
| C4 – 5xx errors resolved | Googlebot resumes normal crawl rate within 1–7 days of consistent 200 responses | 3–6 weeks for crawl budget normalisation site-wide | Crawl Stats report: crawl requests increasing, crawl errors trending to zero | Crawl rate still suppressed 14 days after 5xx errors fully resolved |
| C5 – Pagination directives corrected | Next crawl cycle (3–14 days depending on crawl frequency) | 2–6 weeks depending on page depth and crawl rate | Coverage report: previously excluded pagination pages either indexed or correctly excluded per strategy | Items within pagination still not indexed 45 days post-fix |

One important note on robots.txt corrections specifically: requesting indexing for individual pages through GSC URL Inspection does not make Google re-read robots.txt. Google generally caches the file for up to 24 hours, and scheduled crawls keep using the cached copy until it is refreshed. A live URL Inspection test may already show a page as accessible, but that does not mean Googlebot’s cached rules have been updated. To speed up re-evaluation, use the robots.txt report in Search Console, which lets you request a recrawl of the file itself.

Crawl Layer — Tool-Specific Diagnostic Sequences

Each diagnostic sequence below maps a specific Crawl-layer mistake to the exact tool, report, and configuration required to surface it. These are not general tool recommendations — they are step-by-step detection workflows.

Screaming Frog — Crawl Layer Sequences

Detecting C1 (robots.txt blocks): In Screaming Frog, set the robots.txt configuration to “Ignore robots.txt but report status”, then run a full crawl so blocked URLs are both crawled and flagged. Export the URLs in the Response Codes tab’s “Blocked by Robots.txt” filter and cross-reference them against GSC Coverage → Excluded → Blocked by robots.txt. Any URL appearing in both is confirmed as blocked and discoverable. If Screaming Frog is connected to the GSC API, sort by clicks or impressions to prioritise high-value blocked pages first.

Detecting C2 (crawl budget waste): Import Googlebot access logs into the Screaming Frog Log File Analyser (a separate product from the SEO Spider) and sort URLs by the number of Googlebot requests. Group URLs by template type, for example by URL path pattern, to identify which template classes consume the most crawl activity relative to their indexed value. Any template with high crawl frequency and low GSC impressions is a budget waste candidate.
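
The same comparison can be scripted. The sketch below groups URLs by their first path segment (a hypothetical stand-in for “template”; real sites often need a custom mapping) and compares each template’s share of Googlebot requests with its share of GSC impressions. The input dictionaries are assumed to come from your own log analysis and a GSC performance export, and the 20% and 5% thresholds are illustrative, not recommended values.

```python
from collections import defaultdict
from urllib.parse import urlsplit


def template_of(url: str) -> str:
    """Hypothetical template rule: the first path segment, e.g. /search/."""
    segment = urlsplit(url).path.strip("/").split("/")[0]
    return f"/{segment}/" if segment else "/"


def budget_waste_candidates(
    googlebot_hits: dict[str, int],
    impressions: dict[str, int],
    min_crawl_share: float = 0.2,
    max_impression_share: float = 0.05,
) -> list[str]:
    """Templates that absorb a large share of crawling but earn few impressions."""
    hits, impr = defaultdict(int), defaultdict(int)
    for url, count in googlebot_hits.items():
        hits[template_of(url)] += count
    for url, count in impressions.items():
        impr[template_of(url)] += count
    total_hits = sum(hits.values()) or 1
    total_impr = sum(impr.values()) or 1
    return sorted(
        t for t in hits
        if hits[t] / total_hits >= min_crawl_share
        and impr[t] / total_impr <= max_impression_share
    )


HITS = {"/search?q=red+shoes": 400, "/search?q=blue": 350, "/products/red-shoe": 150, "/blog/guide": 100}
IMPRESSIONS = {"/products/red-shoe": 9000, "/blog/guide": 3000}
print(budget_waste_candidates(HITS, IMPRESSIONS))
```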

Detecting C3 (orphaned pages): A crawl that only follows links cannot find pages nothing links to, so enable Screaming Frog’s XML sitemap crawl options to add sitemap URLs as a source and, optionally, connect the GSC and GA APIs. After the crawl, run Crawl Analysis and open the Sitemaps tab’s “Orphan URLs” filter: these are URLs listed in a sitemap that received no internal links from crawled HTML pages, which is exactly the structurally orphaned set that is only discoverable via XML. Export the list and cross-reference against GSC Coverage to identify which orphaned pages are currently indexed versus excluded.
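
Underneath the tooling, orphan detection is a set difference: URLs listed in your XML sitemaps minus URLs that receive at least one internal HTML link. The sketch below assumes a standard sitemaps.org urlset file and a set of linked URLs taken from a crawler’s inlinks export; all URLs are hypothetical.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "{http://www.sitemaps.org/schemas/sitemap/0.9}"


def sitemap_urls(sitemap_xml: str) -> set[str]:
    """Extract every <loc> value from a urlset sitemap."""
    root = ET.fromstring(sitemap_xml)
    return {loc.text.strip() for loc in root.iter(f"{SITEMAP_NS}loc") if loc.text}


def orphaned(sitemap_xml: str, internally_linked: set[str]) -> list[str]:
    """URLs in the sitemap that no crawled HTML page links to."""
    return sorted(sitemap_urls(sitemap_xml) - internally_linked)


SITEMAP = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://www.example.com/</loc></url>
  <url><loc>https://www.example.com/guides/technical-seo</loc></url>
  <url><loc>https://www.example.com/guides/legacy-page</loc></url>
</urlset>"""

LINKED = {"https://www.example.com/", "https://www.example.com/guides/technical-seo"}
print(orphaned(SITEMAP, LINKED))
```

Normalise both sets the same way (protocol, host, trailing slash) before diffing, or formatting differences will show up as false orphans.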

Google Search Console — Crawl Layer Sequences

C1 verification: Coverage → Excluded → “Blocked by robots.txt.” Monitor the count over 14-day windows post-fix. A declining trend confirms Googlebot is re-fetching the corrected file. A static or growing count suggests the fix has not propagated, that another rule in the file still matches the affected paths, or that a separate robots.txt on a different host (each subdomain and protocol serves its own file) is doing the blocking.

C4 monitoring (5xx error resolution): Settings → Crawl Stats → By Response. Filter by Server errors (5xx). Plot the error volume over the 30 days preceding and following the server-side fix. A clean recovery shows a hard drop in 5xx volume followed by a gradual increase in crawl requests over the subsequent two to three weeks as Googlebot re-establishes normal crawl pressure on the site.

C2 crawl budget confirmation: Settings → Crawl Stats. The report breaks crawl requests down by response, file type, purpose, and Googlebot type, but not by site template, so use server logs (or the example URLs listed under each breakdown) to compare crawl distribution across templates before and after parameter blocking or noindex implementation on low-value URL classes. The target outcome is a measurable shift in crawl volume toward high-value templates within four to six weeks.

Ahrefs Site Audit — Crawl Layer Sequences

C3 orphaned page detection: Run a full site audit in Ahrefs with your XML sitemaps included as a crawl source, because a crawler that only follows links cannot reach pages nothing links to. In Page Explorer, filter for pages with zero incoming internal links to surface every URL the Ahrefs bot found without an internal link path. Cross-reference the results with GSC indexed page counts. Pages in this list that currently earn impressions are at particular risk: they are indexed despite structural isolation, so a reduction in crawl activity could push them out of the index without any content change triggering the exclusion.

C5 pagination audit: Ahrefs Site Audit → Page Explorer → filter URL contains “page=” or “?p=” or similar pagination parameter pattern used on the target site. Review the canonical tag and meta robots values for each paginated URL. Inconsistencies across the paginated sequence — some pages self-canonicalising, others pointing to page 1, others noindexed — indicate an implementation-level inconsistency that needs standardising before any pagination strategy can be considered functional.
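
Once the canonical and meta robots values for a paginated series are exported, classifying each page’s strategy makes inconsistencies obvious. The sketch assumes each row is a dict with hypothetical url, canonical, and robots keys (empty strings where a value is absent) and that the first row is page 1.

```python
def pagination_strategy(page: dict[str, str], first_url: str) -> str:
    """Classify how one paginated URL is handled."""
    if "noindex" in page["robots"].lower():
        return "noindex"
    if page["canonical"] == page["url"]:
        return "self-canonical"
    if page["canonical"] == first_url:
        return "canonical-to-page-1"
    if not page["canonical"]:
        return "no-directive"
    return "canonical-elsewhere"


def audit_series(pages: list[dict[str, str]]) -> dict[str, list[str]]:
    """Group page 2+ URLs by strategy; more than one key means an inconsistent series."""
    first_url = pages[0]["url"]
    groups: dict[str, list[str]] = {}
    for page in pages[1:]:
        groups.setdefault(pagination_strategy(page, first_url), []).append(page["url"])
    return groups


SERIES = [
    {"url": "/shoes", "canonical": "/shoes", "robots": ""},
    {"url": "/shoes?page=2", "canonical": "/shoes?page=2", "robots": ""},
    {"url": "/shoes?page=3", "canonical": "/shoes", "robots": ""},
    {"url": "/shoes?page=4", "canonical": "", "robots": "noindex, follow"},
]
result = audit_series(SERIES)
print(result)
if len(result) > 1:
    print("Inconsistent pagination handling across the series")
```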

A structured content cluster analysis can also help identify crawl architecture gaps between topic hubs and their supporting pages, which frequently reveals orphaned or underlinked content within thematically organised site sections.

CRISD Layer 2 — Render: Technical SEO Implementation Mistakes in JavaScript Execution and Page Rendering

Confirming that Googlebot can reach a page is step one. Confirming it sees the right content once it arrives is an entirely different problem. Render-layer failures are easy to miss precisely because the site looks correct to every human visitor and returns clean server logs. The failure only surfaces when you compare what Google’s Web Rendering Service actually captured against what your server intended to deliver — and the two don’t match in ways that cost rankings.

Mistake R1 — Client-Side Rendering Hiding Critical Content From Googlebot (P1)

What goes wrong: Client-side rendering (CSR) assembles page content in the browser after JavaScript executes. Googlebot’s Web Rendering Service does process JavaScript, but it operates in a queued, resource-constrained environment. Pages are crawled first in their unrendered state (for CSR, a near-empty HTML shell) and added to a rendering queue for deferred processing. Google has said most pages are rendered soon after crawling, but the delay is not guaranteed, and on sites with high URL volume it can stretch well beyond the initial crawl.

Why it happens: React, Vue, and Angular applications built for speed and developer experience often make no distinction between content that carries SEO weight and content that doesn’t. Everything gets assembled client-side by default. The fact that Googlebot can eventually render it creates a false sense of security — “eventually” isn’t the same as “reliably and on time.”

How to detect it: Open GSC URL Inspection for any key page. Select “View Tested Page” → “HTML” and compare the rendered DOM output against the raw HTTP response from your server (curl or view-source). If headings, body copy, internal links, or JSON-LD schema present in the browser are absent from the initial server response, you have a CSR indexation exposure. You can also run an AI-powered content analysis to identify which content elements are missing from the served HTML across multiple pages at once.

How to fix it: For pages carrying primary SEO value — category pages, product pages, pillar content — implement server-side rendering (SSR) or static generation so critical content is present in the initial HTTP response. Frameworks like Next.js and Nuxt offer this at the route level, allowing CSR to remain for interactive components while SEO-critical content is pre-rendered. Hybrid rendering is a valid middle ground; full CSR for landing pages that matter is not.

Mistake R2 — JavaScript-Deferred Navigation Blocking Crawl Path Discovery (P1)

What goes wrong: Internal links rendered via JavaScript — navigation menus built with JS frameworks, “load more” pagination, anchor tags appended by event handlers — may not be present during Googlebot’s initial crawl pass. If rendering hasn’t occurred, Googlebot may reach the homepage and fail to discover any interior pages whose links only appear after script execution. The site architecture exists visually. Structurally, it’s invisible to the crawler.

Why it happens: Developers building component-based navigation don’t always consider crawl dependency. A React navigation bar that renders perfectly in a browser and passes every accessibility audit may still generate anchor tags exclusively at runtime. Nobody flags this during QA because the QA process doesn’t run without JavaScript.

How to detect it: Run two separate Screaming Frog crawls of the same site — one with JavaScript rendering enabled (using the built-in Chromium renderer), one with it disabled. Export the internal links report from each. Any links present in the JS-enabled crawl but absent in the JS-disabled crawl are JavaScript-dependent. If key navigation paths only appear in the JS-on version, your internal link architecture is partially invisible to crawlers operating without full rendering.

How to fix it: Ensure all primary navigation — header nav, footer links, breadcrumbs, category and pagination links — is rendered in the server-delivered HTML. Interactive elements like dropdowns or mega-menus can still use JavaScript for behaviour; the underlying anchor tags must be present in the DOM before any JavaScript executes. For SPAs where this isn’t feasible without major refactoring, an XML sitemap covering all critical interior URLs is a minimum mitigation, not a complete fix.
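
One way to confirm that navigation survives without JavaScript is to parse the raw server HTML (what curl returns, before any script runs) and list its link targets. The sketch below uses Python’s built-in html.parser; the EXPECTED_NAV paths and sample markup are hypothetical. Only <a> elements with an href attribute are counted, since those are the links Google can follow; click handlers on other elements are deliberately ignored.

```python
from html.parser import HTMLParser


class AnchorCollector(HTMLParser):
    """Collect href values from <a> elements in static HTML."""

    def __init__(self):
        super().__init__()
        self.hrefs = set()

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.hrefs.add(href)


def missing_nav_links(raw_html: str, expected: set[str]) -> set[str]:
    """Expected navigation targets absent from the server-delivered HTML."""
    collector = AnchorCollector()
    collector.feed(raw_html)
    return expected - collector.hrefs


EXPECTED_NAV = {"/products/", "/guides/", "/about/"}
RAW_HTML = """
<nav>
  <a href="/products/">Products</a>
  <a href="/guides/">Guides</a>
  <span onclick="location.href='/about/'">About</span>
</nav>
"""
print(missing_nav_links(RAW_HTML, EXPECTED_NAV))
```

Here the About link is reported missing: it works for a user clicking in a browser, but there is no crawlable anchor in the served HTML.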

Mistake R3 — Hydration Errors Causing Rendered vs. Served Content Mismatch (P1)

What goes wrong: Frameworks using server-side rendering with client-side hydration — Next.js, Nuxt, SvelteKit — send pre-rendered HTML from the server, then re-attach JavaScript interactivity client-side. When the server output and client output don’t match exactly, hydration fails. The result can range from visual glitches to complete DOM replacement. Googlebot, processing the page at an indeterminate point in this cycle, may capture a partially assembled or corrupted content state.

Why it happens: Hydration mismatches are most often caused by content that differs between server and client contexts: dates formatted differently depending on timezone, user-specific data injected server-side, randomised content (like “featured” or “recommended” blocks), or conditional rendering logic that evaluates differently in Node versus browser environments. Even a single mismatched text or whitespace node can be enough: since React 18, a mismatch can cause React to discard the server-rendered HTML for the affected tree and re-render it on the client.

How to detect it: Load the affected page in Chrome with the DevTools Console open. Hydration errors in React, Next.js, and Nuxt produce console warnings, and development builds identify the mismatched node explicitly. Mismatches caused by timezone or environment logic reproduce on every load; those caused by randomised content may appear only intermittently, so reload several times. Cross-reference by running GSC URL Inspection and comparing the rendered HTML output against your server’s raw HTTP response. Meaningful structural differences between the two confirm a rendering pipeline failure. For schema-bearing pages, also validate whether your JSON-LD structured data survives the hydration cycle intact: schema injected server-side can be lost or duplicated when the client-side hydration phase replaces DOM nodes.
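
To check whether structured data survives hydration, you can count the schema.org @type values in the JSON-LD blocks of the raw server response and of the rendered HTML copied from URL Inspection, then diff the two. A minimal sketch using Python’s html.parser and json modules follows; the sample markup is hypothetical, and the parser only reads <script type="application/ld+json"> blocks (including @graph arrays), not Microdata or RDFa.

```python
import json
from collections import Counter
from html.parser import HTMLParser


class JsonLdCollector(HTMLParser):
    """Gather the text of every <script type="application/ld+json"> block."""

    def __init__(self):
        super().__init__()
        self.blocks, self._buffer, self._inside = [], [], False

    def handle_starttag(self, tag, attrs):
        if tag == "script" and dict(attrs).get("type") == "application/ld+json":
            self._inside, self._buffer = True, []

    def handle_data(self, data):
        if self._inside:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if tag == "script" and self._inside:
            self.blocks.append("".join(self._buffer))
            self._inside = False


def _types(node):
    """Yield @type values from a JSON-LD object, list, or @graph."""
    if isinstance(node, list):
        for item in node:
            yield from _types(item)
    elif isinstance(node, dict):
        yield from _types(node.get("@graph", []))
        declared = node.get("@type")
        if isinstance(declared, list):
            yield from declared
        elif declared:
            yield declared


def schema_types(html: str) -> Counter:
    collector = JsonLdCollector()
    collector.feed(html)
    return Counter(t for block in collector.blocks for t in _types(json.loads(block)))


def compare_schema(server_html: str, rendered_html: str) -> dict[str, Counter]:
    """Types lost during hydration, and types that gained extra copies."""
    server, rendered = schema_types(server_html), schema_types(rendered_html)
    return {"lost": server - rendered, "added": rendered - server}


PRODUCT = '<script type="application/ld+json">{"@type": "Product", "name": "Red Shoe"}</script>'
CRUMBS = '<script type="application/ld+json">{"@type": "BreadcrumbList", "itemListElement": []}</script>'
SERVER_HTML = PRODUCT + CRUMBS
RENDERED_HTML = PRODUCT + PRODUCT  # breadcrumb lost, product duplicated
print(compare_schema(SERVER_HTML, RENDERED_HTML))
```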

How to fix it: Eliminate all content that differs between server and client render contexts. Move user-specific, time-sensitive, or randomised content into client-only components: next/dynamic with ssr: false or a useEffect-mounted component (React/Next.js), or a <ClientOnly> wrapper (Nuxt). This keeps the server-rendered shell consistent and stable, limiting hydration to attaching interactivity rather than reconciling content.

Mistake R4 — INP Regression After Dependency Updates or Third-Party Script Injection (P2)

What goes wrong: On March 12, 2024, Interaction to Next Paint (INP) replaced First Input Delay (FID) as a Core Web Vitals metric. FID measured only the input delay of the first interaction. INP measures the full latency of interactions (input delay, processing time, and presentation delay) across the page’s lifetime and reports the worst one, or close to the worst on pages with many interactions. Sites that passed CWV under FID can now fail under INP without deploying a single line of new code, simply because the measurement standard is stricter.

Why it happens: INP regressions after the March 2024 transition are most commonly caused by accumulated third-party script weight. Each marketing tag added through GTM (analytics, chat, heat mapping, A/B testing, ad pixels) increases the JavaScript execution payload on the main thread. These additions bypass the development review process entirely. Over time, their cumulative weight drives long tasks that push INP past the 200 ms “good” threshold with no identifiable single cause.

How to detect it: Open Chrome DevTools → Performance tab. Record a representative page interaction, such as a button click, form field input, or accordion toggle that users commonly perform. Expand the long task timeline after recording. Any task blocking the main thread for over 50ms can delay the next paint and contributes to INP. For field attribution, the web-vitals JavaScript library’s attribution build (which draws on the Event Timing and Long Animation Frames APIs) can report which interaction produced the worst INP and which script was responsible.
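
If you export task durations from a Performance recording, the 50 ms rule is easy to apply programmatically. The sketch below flags long tasks and sums the portion of each task beyond 50 ms, the same per-task arithmetic lab tools use for Total Blocking Time (which additionally limits the sum to the window between First Contentful Paint and Time to Interactive). TBT is a lab proxy that tends to correlate with INP, not INP itself, and the task names and durations here are hypothetical.

```python
LONG_TASK_MS = 50.0  # main-thread tasks longer than this count as long tasks


def blocking_summary(tasks: list[tuple[str, float]]) -> tuple[list[str], float]:
    """Return (names of long tasks, total blocking time in ms).

    Blocking time is the part of each task beyond 50 ms.
    """
    long_tasks = [name for name, duration in tasks if duration > LONG_TASK_MS]
    blocking = sum(max(0.0, duration - LONG_TASK_MS) for _, duration in tasks)
    return long_tasks, blocking


# Hypothetical durations recorded around an accordion click.
TASKS = [("gtm.js", 180.0), ("chat-widget.js", 95.0), ("app.js click handler", 40.0)]
names, blocked_ms = blocking_summary(TASKS)
print(names, blocked_ms)
```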

How to fix it: Audit third-party scripts via a tag manager review — remove any tags that are unused, duplicated, or no longer serving an active purpose. For scripts that must remain, move them from blocking to deferred loading using the async or defer attribute. For long tasks originating in first-party code, break them into smaller non-blocking chunks using scheduler.yield() or setTimeout decomposition. Retest in field data after 28 days — lab scores improve immediately; CrUX field data reflects real-user conditions on a rolling window.
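
Field assessment uses the 75th percentile of real-user INP values, rated good at 200 ms or below and poor above 500 ms. The sketch below approximates that rating from raw INP samples in your own real-user monitoring data using a nearest-rank percentile. CrUX computes its percentiles from aggregated histograms, so treat this as an approximation for internal tracking, not a reproduction of GSC’s numbers; the before and after samples are hypothetical.

```python
import math


def p75(samples: list[float]) -> float:
    """75th percentile by the nearest-rank method."""
    ordered = sorted(samples)
    rank = math.ceil(0.75 * len(ordered))
    return ordered[rank - 1]


def rate_inp(samples: list[float]) -> str:
    """Rate INP with the Core Web Vitals thresholds at the 75th percentile."""
    value = p75(samples)
    if value <= 200:
        return "good"
    if value <= 500:
        return "needs improvement"
    return "poor"


before = [120, 180, 260, 340, 410, 520, 610, 150]
after = [90, 110, 130, 140, 160, 170, 190, 240]
print(rate_inp(before), "->", rate_inp(after))
```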

Mistake R5 — Render-Blocking Resources Elevating LCP Beyond Threshold (P2)

What goes wrong: Largest Contentful Paint (LCP) measures how quickly the largest visible element — typically a hero image, H1, or above-fold content block — loads and becomes visible. The acceptable threshold is 2.5 seconds. CSS and JavaScript files loaded synchronously in the document <head> block rendering: the browser cannot paint anything until those files are fetched, parsed, and executed. Pages with large blocking resource chains inflate LCP significantly regardless of server response speed.

Why it happens: The root cause is rarely a single oversized file. It is almost always an ordering problem. Scripts that don’t need to execute before first paint — analytics initialisation, font fallback logic, UI framework bootstrapping for below-fold components — are placed in the document head alongside critical CSS. Nobody audits the <head> for performance impact during normal development. Resources accumulate over time without any single addition causing an obvious regression.
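
A first-pass audit of the <head> can be scripted. The sketch below parses raw HTML with Python’s html.parser and lists external scripts in the head that lack async, defer, or type="module" (module scripts are deferred by default), plus stylesheets not restricted by media="print". It is a static heuristic under those assumptions: it cannot tell whether a blocking resource is genuinely needed for first paint, which still requires the waterfall review. The sample markup is hypothetical.

```python
from html.parser import HTMLParser


class HeadBlockingAudit(HTMLParser):
    """List likely render-blocking resources declared in <head>."""

    def __init__(self):
        super().__init__()
        self.in_head = False
        self.blocking = []

    def handle_starttag(self, tag, attrs):
        attr = dict(attrs)  # boolean attributes such as defer map to None
        if tag == "head":
            self.in_head = True
        elif tag == "body":
            self.in_head = False
        elif not self.in_head:
            return
        elif tag == "script" and attr.get("src"):
            deferred = "async" in attr or "defer" in attr or attr.get("type") == "module"
            if not deferred:
                self.blocking.append(f"script {attr['src']}")
        elif tag == "link" and "stylesheet" in (attr.get("rel") or "").lower().split():
            if (attr.get("media") or "all").lower() != "print":
                self.blocking.append(f"stylesheet {attr.get('href')}")

    def handle_endtag(self, tag):
        if tag == "head":
            self.in_head = False


PAGE = """<html><head>
<link rel="stylesheet" href="/css/critical.css">
<link rel="stylesheet" href="/css/print.css" media="print">
<script src="/js/analytics.js"></script>
<script src="/js/app.js" defer></script>
<script type="module" src="/js/widgets.js"></script>
</head><body><script src="/js/footer.js"></script></body></html>"""

audit = HeadBlockingAudit()
audit.feed(PAGE)
print(audit.blocking)
```

In this example the critical stylesheet is expected to block; the synchronous analytics script is the real candidate for async or defer.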

How to detect it: Run a WebPageTest test from a representative geographic location using a realistic device profile (mid-range Android on 4G, not a desktop on fibre). Open the waterfall view. Resources loading before “Start Render” that are not strictly required for initial content display are blocking candidates. The filmstrip view pinpoints exactly when the LCP element becomes visible — a wide gap between “Start Render” and “LCP” confirms a specific large element is the constraint, not just generic blocking overhead.

How to fix it: Add defer to non-critical JavaScript files so they download in parallel and execute only after HTML parsing completes. Move analytics and tag manager scripts below critical CSS in the document head, or load them via async. Preload the LCP image or font using <link rel="preload"> to start fetching it earlier in the resource waterfall. Eliminate unused CSS by auditing stylesheets against the rendered page: the Coverage panel in Chrome DevTools shows how much of each stylesheet is actually used.

Mistake R6 — Dynamic Content Injected After Initial Load Not Captured in Index (P1)

What goes wrong: Content loaded via AJAX after page load, content conditional on user interaction, and content behind lazy-load triggers that require scroll or click events will not be present when Googlebot processes the page. This is distinct from standard CSR — R6 describes content that is deliberately deferred until a trigger Googlebot never replicates. The page structure renders. The content that makes it valuable does not.

Why it happens: The pattern is most common in ecommerce and media platforms optimised for perceived performance. Product attributes fetched from a separate API endpoint after initial shell render, review counts loaded asynchronously, pricing data populated by a client-side call — each of these choices improves Time to First Byte metrics while silently removing ranking-relevant content from the indexed version of the page. The performance team optimises the loading pattern. Nobody audits the SEO consequence.

How to detect it: Use GSC URL Inspection → “View Tested Page” → “HTML” and search for specific content strings you expect to be on the page: product name, review count, price, or a body copy sentence. If they’re absent from the rendered HTML output but visible in the browser, they’re being loaded after Googlebot’s render window closes. Run the same check using curl — curl -s [URL] | grep "[expected string]" — to confirm what the server actually sends before any JavaScript executes.
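
The curl check can be extended to several strings at once. The sketch below fetches the raw server response with Python’s urllib (no JavaScript runs, so it sees what curl sees), unescapes HTML entities so that &pound; and £ compare equal, and reports which expected strings are absent. The URL and strings are placeholders; the server may vary its response by user agent or cookies, so compare like with like.

```python
import html
import urllib.request


def fetch_raw_html(url: str) -> str:
    """Return the server response body as text, before any JavaScript runs."""
    with urllib.request.urlopen(url, timeout=10) as resp:
        charset = resp.headers.get_content_charset() or "utf-8"
        return resp.read().decode(charset, errors="replace")


def missing_strings(raw_html: str, expected: list[str]) -> list[str]:
    """Expected content strings that do not appear in the raw HTML."""
    text = html.unescape(raw_html).casefold()
    return [s for s in expected if s.casefold() not in text]


if __name__ == "__main__":
    # Offline demo; in practice: raw = fetch_raw_html("https://www.example.com/product/red-shoe")
    raw = "<html><body><h1>Red Running Shoe</h1><div id='price'></div></body></html>"
    print(missing_strings(raw, ["Red Running Shoe", "£89.99", "128 reviews"]))
```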

How to fix it: Move primary content attributes into the server-rendered HTML response. For product data specifically, embed core attributes (name, description, price, availability) in the initial SSR output rather than fetching them client-side post-load. If the architecture requires client-side fetching for performance reasons (real-time inventory, personalised pricing), ensure the static content version is pre-rendered with representative or fallback values that give Googlebot meaningful content to index. Dynamic personalisation and SEO indexation are separate concerns: serve the same indexable baseline to every anonymous request, crawlers included, and layer personalised values on top for authenticated users. Serving crawlers different content from what anonymous users see risks being treated as cloaking.

Render Layer Recovery Timeline

Render-layer fixes involve two sequential steps before results appear: Googlebot must first recrawl the page, then pass it through the Web Rendering Service queue for re-processing. These two events don’t happen simultaneously. On large sites the rendering queue adds meaningful lag on top of the recrawl interval. Core Web Vitals fixes add a further wrinkle — lab scores (Lighthouse, PageSpeed Insights) update immediately after deployment, but field data from Chrome User Experience Report (CrUX) operates on a 28-day rolling window. A page can show green in Lighthouse and still fail in GSC’s Core Web Vitals report for nearly a month after a legitimate fix.

| Mistake | Expected Recrawl Window | Expected Index Reflection | Verification Signal | Escalation Trigger |
|---|---|---|---|---|
| R1: CSR content moved to SSR | 1–7 days | 2–4 weeks | GSC URL Inspection: rendered HTML now contains target content in initial DOM | Rendered HTML still empty or partial after 14 days |
| R2: JS-deferred nav replaced with HTML links | 1–3 days (homepage); 1–2 weeks (interior pages) | 2–6 weeks for newly discoverable pages to be indexed | Screaming Frog JS-off crawl discovers same navigation links as JS-on crawl | Interior pages still not indexed 30 days after link fix |
| R3: Hydration errors resolved | 1–7 days | 2–4 weeks | GSC URL Inspection: rendered HTML matches server response; no console hydration errors | Persistent mismatch between rendered HTML and server response after 21 days |
| R4: INP regression fixed | Immediate in lab; 28-day CrUX rolling window for field data | CWV status updates 28–35 days after consistent field improvement | GSC Core Web Vitals report: “Poor” URL count declining week over week | Field data not improving 6 weeks post-fix despite confirmed lab score improvement |
| R5: Render-blocking resources removed | Immediate in lab; 28-day CrUX rolling window for field data | CWV pass/fail status updates within 28–35 days | WebPageTest waterfall: blocking resources removed from pre-render path; LCP element loading earlier | Field LCP still “Poor” 35 days after fix with confirmed lab improvement |
| R6: Dynamic content moved to initial HTML | 1–7 days | 2–4 weeks for newly visible content to affect ranking signals | GSC URL Inspection rendered HTML and curl response both contain target content strings | Content still absent from rendered output 14 days after server-side fix confirmed |

Render Layer — Tool-Specific Diagnostic Sequences

Google Search Console URL Inspection (Rendered vs. Served)

URL Inspection is the most direct window into what Googlebot actually sees. For any render-layer investigation, open URL Inspection on the target page and run “Test Live URL.” Once complete, select “View Tested Page” and open both the “Screenshot” tab and the “HTML” tab.

The screenshot shows what Googlebot’s renderer visually captured. The HTML tab shows the full rendered DOM after JavaScript execution. Compare this against the raw server response by running curl -s [URL] in your terminal or using view-source in Chrome before JavaScript executes. Any content, heading, link, or structured data present in the rendered HTML but absent in the server response is JavaScript-dependent and at risk during Googlebot’s crawl-first pass.
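The served-versus-rendered comparison can be approximated in code once both versions are saved as text: the raw response from curl and the rendered HTML copied from the URL Inspection “HTML” tab. This is a rough sketch. `extractSignals` and `renderedOnly` are hypothetical helpers, and their regular expressions are a quick approximation rather than an HTML parser, so treat the output as a shortlist for manual review.

```javascript
// Collect link targets, h1–h3 text and JSON-LD @type values from an HTML string.
function extractSignals(html) {
  const links = [...html.matchAll(/<a\b[^>]*\bhref\s*=\s*["']([^"']+)["']/gi)].map((m) => m[1]);
  const headings = [...html.matchAll(/<h([1-3])\b[^>]*>([\s\S]*?)<\/h\1>/gi)]
    .map((m) => `h${m[1]}: ${m[2].replace(/<[^>]+>/g, '').trim()}`);
  const schemaTypes = [];
  for (const m of html.matchAll(/<script[^>]*application\/ld\+json[^>]*>([\s\S]*?)<\/script>/gi)) {
    try {
      const data = JSON.parse(m[1]);
      // Flatten top-level arrays and @graph wrappers one level deep.
      for (const node of [].concat(data['@graph'] || data)) {
        if (node && node['@type']) schemaTypes.push(`schema: ${[].concat(node['@type']).join(',')}`);
      }
    } catch {
      schemaTypes.push('schema: <unparseable JSON-LD>');
    }
  }
  return { links, headings, schemaTypes };
}

// Items present after rendering but missing from the raw server response
// depend on JavaScript and are at risk if rendering fails or is delayed.
function renderedOnly(servedHtml, renderedHtml) {
  const served = extractSignals(servedHtml);
  const rendered = extractSignals(renderedHtml);
  const diff = (key) => {
    const seen = new Set(served[key]);
    return rendered[key].filter((item) => !seen.has(item));
  };
  return { links: diff('links'), headings: diff('headings'), schemaTypes: diff('schemaTypes') };
}
```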

For pages carrying schema markup, cross-reference the rendered HTML output against your expected JSON-LD. Schema blocks injected or modified by client-side scripts may be present in the rendered view but absent from what the server delivers. Google can process JSON-LD injected with JavaScript, but only once rendering succeeds, which makes those blocks a more fragile basis for rich result eligibility than server-delivered markup. If you’re auditing schema integrity across rendered pages at scale, an AI-assisted content analysis workflow can identify discrepancies between served and rendered content across an entire URL set faster than manual URL-by-URL inspection.

Run URL Inspection checks immediately after any deployment that touches template-level rendering logic, JavaScript bundles, or SSR/hydration configuration. Don’t wait for GSC to surface a coverage anomaly — that lag can cost weeks of ranking stability.

Screaming Frog (JS-On vs. JS-Off Delta Crawl)

The JS-on versus JS-off delta crawl is the most reliable method for identifying render-dependent content and navigation at scale. The process requires two separate Screaming Frog crawls of the same site.

First crawl: Configuration → Spider → Rendering → set to “JavaScript.” This uses Screaming Frog’s built-in Chromium renderer to execute JavaScript before collecting page data. Second crawl: set Rendering to “Text Only.” This crawls the raw HTTP response only, replicating what Googlebot sees before any JavaScript executes.

After both crawls complete, export the Internal Links report from each. Import both CSVs into a spreadsheet and perform a VLOOKUP or MATCH comparison to isolate links present in the JS-on export but absent from the JS-off export. These are your render-dependent links. Prioritise by page depth and link equity value — navigation links at depth 1 missing from the JS-off crawl are P1 incidents. Blog pagination links missing from JS-off are P2.
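The VLOOKUP step amounts to a set difference on (source, destination) pairs. This minimal sketch assumes both exports have already been parsed into arrays of objects with `source`, `destination` and `depth` (the crawl depth of the linking page). Those field names are hypothetical, so map them to the columns in your export. The P1/P2 split mirrors the rule of thumb above. Treating depth 0 or 1 as P1 and everything deeper as P2 is an illustrative assumption.

```javascript
// Rows: { source, destination, depth }, where depth is the crawl depth of the source page.
function renderDependentLinks(jsOnRows, jsOffRows) {
  const key = (row) => `${row.source} -> ${row.destination}`;
  const inRawHtml = new Set(jsOffRows.map(key));
  return jsOnRows
    .filter((row) => !inRawHtml.has(key(row)))
    // Assumed triage rule: links on shallow pages (depth 0 or 1) are P1, the rest P2.
    .map((row) => ({ ...row, priority: row.depth <= 1 ? 'P1' : 'P2' }))
    .sort((a, b) => a.depth - b.depth);
}
```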

Also compare H1 content, meta descriptions, and body word counts between the two crawls for key landing pages. Significant word count differences between JS-on and JS-off on the same URL confirm that body content is JavaScript-dependent — a direct R1 finding that needs SSR remediation. This delta is also worth cross-referencing against your topic cluster architecture to confirm that supporting cluster pages aren’t structurally isolated by JS-only linking patterns.
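One way to rank pages by JavaScript dependence is the ratio of JS-off to JS-on word count per URL. This sketch assumes both crawls have been reduced to `Map`s from URL to word count. The 0.5 default ratio is an arbitrary illustrative threshold, not a Google or Screaming Frog value.

```javascript
// Flag URLs where the raw HTML carries far less text than the rendered page.
function jsDependentContent(jsOnCounts, jsOffCounts, minRatio = 0.5) {
  const flagged = [];
  for (const [url, renderedWords] of jsOnCounts) {
    if (renderedWords === 0) continue;           // nothing to compare against
    const rawWords = jsOffCounts.get(url) ?? 0;  // absent from JS-off crawl counts as 0
    const ratio = rawWords / renderedWords;
    if (ratio < minRatio) {
      flagged.push({ url, rawWords, renderedWords, ratio: Number(ratio.toFixed(2)) });
    }
  }
  // Most JavaScript-dependent pages first.
  return flagged.sort((a, b) => a.ratio - b.ratio);
}
```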

Chrome DevTools (INP / Long Tasks)

For INP diagnosis, open the page in Chrome with DevTools open. Navigate to the Performance tab and click the record button. Perform the interaction you want to measure — a button click, a form field focus, a filter selection — then stop recording after the interaction completes and the page responds.

In the performance timeline, expand the “Main” thread track. Long tasks — JavaScript executions blocking the main thread for over 50ms — appear as red-flagged blocks. Click any long task to see its call stack in the Summary panel below. The call stack identifies which specific function, script file, or third-party library is responsible for the blocking execution. If a third-party script (GTM, analytics, chat widget) appears consistently at the top of long task call stacks, that script is your primary INP contributor.

For a more automated capture, paste this snippet into the console on the target page and perform interactions for 30–60 seconds:

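```javascript
// Log slow interactions using the Event Timing API (Chromium-based browsers).
const observer = new PerformanceObserver((list) => {
  list.getEntries().forEach((entry) => {
    // interactionId is non-zero only for discrete interactions (clicks, taps,
    // key presses), the events INP is built from; events such as hovers are skipped.
    if (entry.interactionId && entry.duration > 200) {
      console.log('Slow interaction:', entry.name, Math.round(entry.duration) + 'ms', entry.target);
    }
  });
});

// buffered: true also reports matching entries recorded before this ran;
// durationThreshold: 200 asks the browser to skip faster events at the source.
observer.observe({ type: 'event', buffered: true, durationThreshold: 200 });
```

Any interaction logged here took longer than 200ms, the upper bound of Google’s “good” INP range. Interactions above 500ms fall into the “poor” range. The entry.target output identifies the DOM element responsible, pointing directly at the component or third-party widget causing the regression. Retest after removing or deferring the offending script. The fix will only appear in GSC’s Core Web Vitals field data once the 28-day CrUX rolling window has accumulated enough improved real-user measurements.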

CRISD Layer 3 — Index: Technical SEO Implementation Mistakes That Prevent or Corrupt Indexation

Crawl confirms access. Render confirms visibility. Index is where things get genuinely complicated — because failures here produce no server error, no visual anomaly, and often no immediate GSC alert. Pages can be crawled correctly, rendered completely, and still end up in the wrong index state, serving the wrong URL, or not indexed at all. The mechanism is almost always directive logic: canonical tags, noindex rules, hreflang annotations, and parameter handling that either conflicts with itself or applies to a scope far wider than intended.

What makes Index-layer failures particularly costly is their silent compounding. A canonical chain misconfigured during a migration doesn’t trigger an alert — it quietly redirects link equity away from the intended URL for months. A template-level noindex applied to the wrong page class can suppress hundreds of URLs before anyone notices the coverage drop. Unlike a robots.txt block or a 5xx error, these failures look like normal operation from every angle except the one that matters: whether Google is indexing the right content at the right URL.

Every mistake in this layer has one of two failure modes: the page is excluded from the index when it should be included, or the wrong version of the page is indexed when a specific version should be preferred. Both outcomes damage organic performance — one through suppression, one through signal fragmentation. The diagnostic approach differs for each, which is why working through this layer systematically matters more than running a single coverage audit and calling it clean.

Mistake I1 — Canonical Chains Diluting Preferred URL Signals (P1)

What goes wrong: A canonical tag is a declaration of preferred URL. When that declaration points to a page that is itself non-canonical — redirected, noindexed, or carrying its own canonical to a third URL — the result is a canonical chain. Google generally attempts to resolve these chains, but it does so by substituting its own judgment for your declared preference. The URL it selects may not be the one you intended, your backlink equity consolidation assumptions break down, and the preferred page often ranks below what it should.

Why it happens: Canonical chains are almost always a migration artefact. The redirect map is implemented correctly — old URLs 301 to new destinations — but the on-page canonical tags are not updated simultaneously. Legacy canonicals continue pointing to the old URL structure after the redirect is in place, creating a situation where the canonical chain and the redirect chain run in parallel, both pointing to outdated destinations. The further a migration is from someone’s current workload, the less likely this ever gets cleaned up.

How to detect it: In Screaming Frog, run a full crawl and navigate to Bulk Export → Canonicals → “Non-Indexable Canonical.” This report surfaces every page whose declared canonical points to a URL that is itself non-indexable: redirected, noindexed, or returning an error. Every entry represents a broken chain. Supplement this with GSC URL Inspection on affected high-value pages — the “Google-selected canonical” field will show whether Google has already overridden your declared preference. When the two differ, Google has rejected your signal.

How to fix it: Every canonical tag must reference the final destination URL directly, with zero intermediate redirects in the chain. There are no shortcuts here — if you have 400 pages with stale canonicals pointing through a redirect, all 400 need updating. Post-migration, the canonical audit should run before the redirect implementation is considered complete, not as a separate cleanup task weeks later. Run a Screaming Frog crawl with both “Follow Canonicals” and “Follow Redirects” enabled, then cross-reference the final resolved URLs against the declared canonical tags to confirm alignment.

Mistake I2 — Template-Level noindex Deployment Errors (P0)

What goes wrong: A noindex directive removes a page from Google’s index after the next crawl. Applied to a single page, this is a deliberate and useful tool. Applied at the template level — through a CMS setting, a plugin misconfiguration, or an HTTP response header — it becomes a P0 incident capable of suppressing entire site sections before anyone notices. The mechanism can be a meta robots tag in the template, an X-Robots-Tag header injected by a plugin or CDN rule, or a CMS-level toggle affecting all pages of a given type.

Why it happens: The most common trigger is a deployment that carries staging-environment configuration into production. During development, sites are typically set to noindex sitewide to prevent premature crawling. That directive is supposed to be removed before launch. When it isn’t — or when it’s removed from the HTML but not from a CDN-layer header rule that was added separately — the site goes live in a state that actively prevents indexation. A close second is CMS plugin settings where a “noindex” checkbox at the category or post-type level gets accidentally toggled, affecting every page in that class simultaneously.

How to detect it: Check both layers independently. In page source or with Screaming Frog, confirm whether a <meta name="robots" content="noindex"> tag is present. Then run curl -I [URL] to inspect HTTP response headers for an X-Robots-Tag: noindex directive. This header is invisible in page source and will not appear in a standard HTML crawl unless you explicitly extract response headers. In GSC, the Page indexing report (formerly Coverage) lists “Excluded by ‘noindex’ tag” among the reasons pages aren’t indexed, with the count and a sample of affected URLs. A sudden spike in this count that correlates with a deployment date is a template-level event until proven otherwise.

How to fix it: Remove the directive from both layers — on-page meta tag and HTTP response header — then submit affected URLs for recrawl via GSC URL Inspection. For high-volume page classes, use the Sitemaps report to resubmit the sitemap containing the affected URLs, which signals to Googlebot that these pages need re-evaluation. Do not rely on organic recrawl timing for P0 incidents; actively queue priority pages. After the fix, implement a post-deploy verification step that programmatically checks robots directives on a representative URL from every major template type before any deployment is marked complete.
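A post-deploy gate along these lines checks both layers for each template. This minimal sketch assumes Node 18+ for the global fetch and a hand-maintained list of one representative URL per template. `hasNoindex`, `findNoindexSources` and `verifyTemplates` are hypothetical names, and the meta-tag matching uses regular expressions rather than a full HTML parser.

```javascript
// True if a robots directive string (meta content or X-Robots-Tag value)
// contains noindex or none, with or without a user-agent prefix.
function hasNoindex(value) {
  return value.split(',').some((raw) => {
    const token = raw.trim().toLowerCase();
    // "googlebot: noindex" carries a user-agent prefix; "max-image-preview:none"
    // and "unavailable_after: <date>" are directives with values, not prefixes.
    const m = /^([a-z0-9_-]+)\s*:\s*(.+)$/.exec(token);
    const isAgentPrefix = m && !m[1].startsWith('max-') && m[1] !== 'unavailable_after';
    const directive = isAgentPrefix ? m[2].trim() : token;
    return directive === 'noindex' || directive === 'none';
  });
}

// Report every layer carrying noindex: meta robots/googlebot tags and the
// X-Robots-Tag header. `headers` may be a fetch Headers object or a plain
// object with lowercase keys.
function findNoindexSources(html, headers) {
  const sources = [];
  for (const tag of html.match(/<meta\b[^>]*>/gi) || []) {
    const name = /\bname\s*=\s*["']?([^"'\s>]+)/i.exec(tag);
    const content = /\bcontent\s*=\s*["']([^"']*)["']/i.exec(tag);
    if (name && content && /^(robots|googlebot)$/i.test(name[1]) && hasNoindex(content[1])) {
      sources.push(`meta:${name[1].toLowerCase()}`);
    }
  }
  const header = typeof headers.get === 'function' ? headers.get('x-robots-tag') : headers['x-robots-tag'];
  if (header && hasNoindex(header)) sources.push('header:x-robots-tag');
  return sources;
}

// Fetch one URL per template and collect any that carry noindex.
async function verifyTemplates(urls) {
  const failures = [];
  for (const url of urls) {
    const res = await fetch(url, { redirect: 'follow' });
    const sources = findNoindexSources(await res.text(), res.headers);
    if (sources.length > 0) failures.push({ url, sources });
  }
  return failures;
}

// Example CI usage: fail the deployment if any template carries noindex.
// verifyTemplates(['https://example.com/', 'https://example.com/category/shoes'])
//   .then((failures) => { if (failures.length) { console.error(failures); process.exit(1); } });
```

Checking both layers in one gate is what catches the staging case described above, where the meta tag is removed but a CDN-level header rule survives.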

Mistake I3 — hreflang Return Tag and Locale Code Failures (P1)

What goes wrong: hreflang tells Google which language or regional version of a page to serve to users in specific locales. When implemented incorrectly, it doesn’t produce a neutral no-signal state — it actively creates indexation conflicts. Google may index the wrong language variant for a region, serve mismatched content to users, or treat correctly differentiated regional pages as duplicate content and consolidate them, destroying the entire purpose of having locale-specific pages.

Why it happens: Two failure modes dominate. First, the missing return tag: every hreflang annotation requires a reciprocal annotation on the referenced page. If the en-US page references the en-GB page, the en-GB page must carry a matching hreflang tag pointing back to en-US. Without this, Google considers the annotation set incomplete and may disregard the entire implementation. Second, malformed or ambiguous locale codes: en-UK instead of en-GB, a bare zh where Simplified and Traditional Chinese pages need zh-Hans and zh-Hant to tell them apart, or country codes used without language prefixes. There is no tolerance for approximation in locale code syntax, and malformed codes are silently ignored.

How to detect it: In Ahrefs Site Audit, navigate to Localisation → hreflang. The report segments errors by type: missing return tags, incorrect locale codes, referenced URLs returning non-200 status codes, and missing self-referencing annotations (Google expects each language version to list itself as well as every alternate). Prioritise “missing return tag” errors first, because they are the most prevalent cause of complete hreflang implementation failure. For manual verification of specific page sets, the hreflang Testing Tool at hreflang.org or Aleyda Solis’s hreflang validator checks annotations URL by URL and flags each error.
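Return-tag completeness is a reciprocity check across the whole annotation set. This sketch assumes annotations have already been extracted into an object keyed by page URL. Each value is an array of `{ hreflang, href }` entries, which is a hypothetical structure. URLs are compared as exact strings, so normalise protocol and trailing slashes first. Self-references are skipped because they need no return link.

```javascript
// annotations: { [pageUrl]: [{ hreflang, href }, ...] }
// Returns every annotation whose target page does not link back.
function findMissingReturnTags(annotations) {
  const missing = [];
  for (const [page, tags] of Object.entries(annotations)) {
    for (const { hreflang, href } of tags) {
      if (href === page) continue; // self-reference: no return tag required
      const targetTags = annotations[href] || []; // uncrawled targets count as missing
      if (!targetTags.some((t) => t.href === page)) {
        missing.push({ from: page, to: href, hreflang });
      }
    }
  }
  return missing;
}
```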

How to fix it: Correct locale codes to ISO 639-1 language codes, optionally followed by an ISO 3166-1 Alpha-2 region. Use an ISO 15924 script subtag (zh-Hans, zh-Hant) only where script variants must be separated. Ensure every referenced URL in an hreflang annotation carries a reciprocal tag. This is a systems problem, not a page-by-page editing task. If your CMS generates hreflang automatically, audit the generation logic against the full list of supported locale combinations rather than spot-checking individual pages. For sites with large URL volumes, add hreflang validation to your CI/CD pipeline or post-deploy audit workflow so annotation drift is caught before it compounds across thousands of URLs.
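A CI check for locale syntax can start with a shape test plus a list of known substitutions. This is a sketch. The pattern checks structure only (two-letter language, optional four-letter script, optional two-letter region, or `x-default`). It cannot confirm that a code is actually assigned in ISO 639-1 or ISO 3166-1, so the two substitution tables are deliberately incomplete, illustrative lists.

```javascript
// language (2 letters), optional script (4 letters), optional region (2 letters).
const HREFLANG_PATTERN = /^([a-z]{2})(?:-([a-z]{4}))?(?:-([a-z]{2}))?$/i;

// Illustrative, incomplete lists of common look-alike mistakes.
const NOT_LANGUAGES = { gb: 'en', us: 'en', jp: 'ja', cn: 'zh' }; // country codes used as languages
const NOT_REGIONS = { uk: 'GB' };                                 // not ISO 3166-1 alpha-2

function checkHreflang(code) {
  if (code.toLowerCase() === 'x-default') return { code, valid: true };
  const m = HREFLANG_PATTERN.exec(code);
  if (!m) return { code, valid: false, reason: 'expected language[-Script][-REGION] with hyphens' };
  const [, lang, , region] = m;
  const langFix = NOT_LANGUAGES[lang.toLowerCase()];
  if (langFix) return { code, valid: false, reason: `"${lang}" is a country code; did you mean "${langFix}"?` };
  const regionFix = region && NOT_REGIONS[region.toLowerCase()];
  if (regionFix) return { code, valid: false, reason: `"${region}" is not ISO 3166-1 alpha-2; use "${regionFix}"` };
  return { code, valid: true };
}
```

Run it over every distinct hreflang value the CMS emits, not over pages. The set of values is small, and one fix in the generation logic corrects every page that uses it.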

Mistake I4 — URL Parameter Proliferation Creating Indexation Debt (P2)

What goes wrong: Tracking parameters, session identifiers, sort and filter combinations, currency selectors, and referral tokens can each generate unique URLs for identical or near-identical content. Left unmanaged, these parameters create indexation debt: Google crawls and indexes multiple URL variants for the same page, splits whatever link equity exists between them, and has no reliable signal for which version represents the canonical. The actual content pages get weaker signals than they should because the available equity is distributed across dozens of parameter variants nobody intended to create.

Why it happens: Parameters accumulate from multiple independent systems — analytics platforms append UTM parameters, session management systems add SIDs, faceted navigation generates filter URL combinations, and affiliate platforms inject tracking tokens. Each system operates independently without knowledge of what the others are doing. A product page URL can accumulate six or seven parameter variants from different source systems, all of which crawlers discover through internal links and external referrals, and all of which end up in the crawl queue as distinct URLs.

How to detect it: In GSC’s Page indexing report (formerly Coverage), the reasons “Duplicate without user-selected canonical” and “Duplicate, Google chose different canonical than user” indicate parameter proliferation at scale. A high count for the second reason is particularly diagnostic. It shows cases where Google has overridden your declared canonical preference, which usually means the parameter variant carries stronger signals than the intended canonical, often because more external links point to the parameter URL than to the clean version. In Screaming Frog, filter URLs by query string presence and segment by parameter key to quantify the volume and identify the primary parameter sources.
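Segmenting by parameter key is a simple tally once the crawled URLs are exported as a list. This is a minimal sketch using the standard `URL` class. `countParameterKeys` is a hypothetical helper. Each key is counted once per URL, so the result reads as “number of URLs carrying this parameter.”

```javascript
// Count how many URLs carry each query parameter key, most common first.
function countParameterKeys(urls) {
  const counts = new Map();
  for (const raw of urls) {
    const { searchParams } = new URL(raw);
    for (const key of new Set(searchParams.keys())) {
      counts.set(key, (counts.get(key) || 0) + 1);
    }
  }
  return [...counts.entries()].sort((a, b) => b[1] - a[1]);
}
```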

How to fix it: Implement canonical tags on all parameter variants pointing to the clean URL. GSC’s URL Parameters tool, once the place to tell Google which parameters to ignore, was retired in 2022. Canonical implementation, together with keeping parameterised URLs out of internal links and sitemaps, is now the main lever for tracking parameters that should never produce indexable URLs. For ecommerce filter combinations that represent genuine search demand, make the indexation decision deliberately: facets with volume get dedicated pages with proper canonical signals, and facets without volume canonicalise to the category root. Do not leave this to Google’s interpretation.
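Generating the canonical for a parameter variant can be reduced to an allow-list rule. This sketch assumes a hypothetical `indexableParams` list holding the facet parameters you have deliberately chosen to index. Every other parameter (UTMs, session IDs, affiliate tokens) is dropped, and the survivors are sorted so that reordered variants share one canonical.

```javascript
// Build the canonical URL for a parameter variant: keep only allow-listed
// facet parameters, in a stable order, and drop the fragment.
function canonicalFor(rawUrl, indexableParams = []) {
  const url = new URL(rawUrl);
  const allowed = new Set(indexableParams);
  const kept = new URLSearchParams();
  for (const [key, value] of url.searchParams) {
    if (allowed.has(key)) kept.append(key, value);
  }
  kept.sort(); // ?size=9&color=red and ?color=red&size=9 map to the same canonical
  url.search = kept.toString(); // empty string removes the "?" entirely
  url.hash = '';
  return url.toString();
}
```

For example, `canonicalFor('https://ex.com/shoes?utm_source=x&color=red', ['color'])` yields `https://ex.com/shoes?color=red`, and with an empty allow-list the same URL canonicalises to `https://ex.com/shoes`.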

Mistake I5 — Canonical Conflicts Between HTTP Header and On-Page Tags (P1)

What goes wrong: A page can carry two simultaneous canonical declarations: an on-page <link rel="canonical"> in the HTML head, and a canonical URL specified via a Link HTTP response header. When these two declarations point to different URLs, Google receives contradictory signals. Google does not document a precedence rule for this case, and conflicting canonical hints can lead it to discount both and pick a canonical on its own. Every page appears to carry correct canonical tags when viewed in source, while the HTTP layer quietly sends a different signal.

Why it happens: This failure is almost always introduced by infrastructure changes rather than content changes. CDN configuration updates, edge caching layer deployments, reverse proxy implementations, and server-side header injection rules can all add Link response headers carrying canonical URLs without any corresponding update to the on-page implementation. The change is invisible to anyone auditing page source. It is invisible in Screaming Frog’s default crawl output unless header extraction is explicitly configured. It goes undetected until a canonical audit includes HTTP response header inspection.

How to detect it: Run curl -I [URL] on representative pages across key templates and look for a Link: <[URL]>; rel="canonical" header in the response. Any URL appearing in this header that differs from the on-page canonical tag is a conflict. In Screaming Frog, go to Configuration → Spider → Response Headers and enable extraction of the Link header. After crawling, export both the Canonicals report and the Response Headers report and cross-reference canonical URLs between the two sources. Mismatches at scale indicate a CDN or server configuration generating incorrect header-level canonicals across page classes.
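The curl-based comparison can be scripted per URL. This sketch assumes you already have the HTML body and the raw `Link` header value, for example from fetch (`res.headers.get('link')`) or a saved `curl -sI` response. `compareCanonicals` and its helpers are hypothetical names. Relative URLs are not resolved, so both sides are compared as exact strings.

```javascript
// Extract the rel="canonical" target from a Link response header, if any.
function canonicalFromLinkHeader(header) {
  if (!header) return null;
  for (const part of header.matchAll(/<([^>]*)>([^,]*)/g)) {
    const rel = /;\s*rel\s*=\s*"?([^";]+)"?/i.exec(part[2]);
    if (rel && rel[1].toLowerCase().split(/\s+/).includes('canonical')) return part[1];
  }
  return null;
}

// Extract the href of the first <link rel="canonical"> in the HTML.
function canonicalFromHtml(html) {
  for (const tag of html.match(/<link\b[^>]*>/gi) || []) {
    const rel = /\brel\s*=\s*["']?([^"'>]+)/i.exec(tag);
    const href = /\bhref\s*=\s*["']?([^"'\s>]+)/i.exec(tag);
    if (rel && href && rel[1].toLowerCase().split(/\s+/).includes('canonical')) return href[1];
  }
  return null;
}

// A conflict exists only when both layers declare a canonical and they differ.
function compareCanonicals(html, linkHeader) {
  const fromHtml = canonicalFromHtml(html);
  const fromHeader = canonicalFromLinkHeader(linkHeader);
  return { html: fromHtml, header: fromHeader, conflict: Boolean(fromHtml && fromHeader && fromHtml !== fromHeader) };
}
```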

How to fix it: The header-level canonical must be removed or corrected at its source, meaning the CDN configuration, reverse proxy rule, or server header injection logic that’s generating it. Fixing the on-page tag alone leaves the conflict in place for as long as the header remains active. After the infrastructure fix, flush the CDN cache and re-verify with curl -I before treating the issue as resolved. Add canonical header inspection to post-deploy verification for any infrastructure change that touches CDN configuration, edge rules, or response header injection. This failure type rarely surfaces through standard crawl audits, so catching it requires deliberate header inspection.

Mistake I6 — Thin Programmatic Pages Consuming Index Budget (P2)

What goes wrong: Large sites with programmatically generated pages frequently produce sections where identical or near-identical templates render with minimal content differentiation. Author archive pages duplicating post listings, tag pages mirroring category content, store locator pages sharing templated copy with only address data varying, thin FAQ pages generated from a database with one sentence per answer — all of these patterns create indexed URLs that contribute no ranking value while consuming crawl budget, diluting topical authority signals, and creating duplicate content exposure across the affected page class.

Why it happens: Programmatic page generation is fast and scales by design. The SEO implications of what gets generated are almost never part of the initial specification. A CMS that can automatically create a tag archive page for every tag applied to every post will do exactly that — generating hundreds or thousands of low-content URLs from normal editorial activity. Nobody audits these at creation time because the CMS handles it automatically. By the time the index debt becomes visible in a coverage report, the affected URL count can be enormous.

How to detect it: In Ahrefs Site Audit, use Page Explorer to filter by URL pattern matching the suspected template type (e.g., /tag/, /author/, /location/). Sort by word count ascending to identify the thinnest pages in each template class. Cross-reference against GSC impressions data — any URL in the thin page set generating zero impressions over a rolling six-month window with no meaningful internal link value is a strong removal candidate. In Screaming Frog, the Word Count column in the bulk export quantifies content volume per URL, enabling quick identification of template classes where average word count is below a meaningful threshold.

How to fix it: The correct approach depends on whether the affected pages can be developed into meaningfully differentiated content. If they can — author pages that could include genuine author bios, editorial perspectives, and curated content selections — invest in the content development and retain them in the index. If they cannot, noindex is cleaner than canonical consolidation. Canonicalising thin tag pages to the homepage or to a parent category misrepresents the content relationship and is likely to be overridden by Google anyway. A clean noindex, combined with ensuring the pages are still crawlable so Googlebot can see the directive, removes them from the indexation budget without breaking the site structure.

Index Layer — Tool-Specific Diagnostic Sequences

Screaming Frog — Index Layer

Canonical chain detection (I1): Run a full crawl with both “Follow Canonicals” and “Follow Redirects” enabled under Configuration → Spider → Advanced. After crawling, go to Bulk Export → Canonicals → “Non-Indexable Canonical.” This surfaces every page whose declared canonical points to a non-indexable URL. Export the full list, then in a separate column resolve the canonical URL through any redirect chain to its final destination. Any page where the declared canonical and the resolved final URL differ requires a canonical tag update to the final destination.
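The “resolve in a separate column” step is easier in code than in a spreadsheet when chains run longer than one hop. This sketch assumes the redirect map has been exported as source → target pairs (for example from your crawler’s redirect report) and loaded into a `Map`. The helper names are hypothetical.

```javascript
// Follow a URL through the redirect map to its final destination.
function resolveFinal(url, redirects, maxHops = 10) {
  const seen = new Set([url]);
  let current = url;
  for (let hop = 0; hop < maxHops && redirects.has(current); hop++) {
    current = redirects.get(current);
    if (seen.has(current)) throw new Error(`Redirect loop at ${current}`);
    seen.add(current);
  }
  if (redirects.has(current)) throw new Error(`More than ${maxHops} hops from ${url}`);
  return current;
}

// pages: [{ url, canonical }]. Returns pages whose declared canonical still redirects.
function findStaleCanonicals(pages, redirects) {
  return pages
    .map(({ url, canonical }) => ({ url, canonical, resolved: resolveFinal(canonical, redirects) }))
    .filter((row) => row.resolved !== row.canonical);
}
```

Each returned row gives the page, its stale canonical, and the `resolved` URL the tag should be updated to.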

noindex scope audit (I2): In the main crawl interface, use the filter Indexability → “Non-Indexable” to isolate all non-indexable URLs. Cross-reference this list against the site’s intended URL inventory. Any page in the non-indexable list that should be indexed is an immediate escalation. Use the “Directives” tab in the page detail view to confirm whether the noindex source is a meta tag, an HTTP header, or a canonical-induced exclusion — the remediation differs depending on the source.

HTTP header canonical extraction (I5): Before crawling, go to Configuration → Spider → Response Headers. Add a custom header extraction rule for the Link header. After the crawl completes, export both the Canonicals report and the Response Headers report. In a spreadsheet, VLOOKUP the two datasets on URL. Any row where the canonical URL in the HTML differs from the canonical URL in the Link response header is a conflict requiring infrastructure-level investigation.

Thin page identification (I6): After crawling, sort the main crawl output by Word Count ascending. Filter by URL pattern to isolate specific template types. Pages with word counts below 200 in content-bearing template classes warrant manual review. Export the low word count URLs alongside their inbound internal link counts and cross-reference with GSC impressions — the combination of thin content, minimal internal links, and zero search impression data is the strongest indicator that noindex is the appropriate resolution.
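The three-way filter (thin content, few internal links, zero impressions) can be applied in one pass once the exports are joined on URL. This sketch assumes crawl rows shaped `{ url, wordCount, inlinks }` and a `Map` of URL to GSC impressions; both shapes are hypothetical. The 200-word cut-off comes from the text above. The `maxInlinks` default of 2 is an illustrative stand-in for “minimal internal links.”

```javascript
// Return pages that are thin, weakly linked internally, and invisible in search.
function thinPageCandidates(crawlRows, impressionsByUrl, { maxWords = 200, maxInlinks = 2 } = {}) {
  return crawlRows.filter((row) =>
    row.wordCount < maxWords &&
    row.inlinks <= maxInlinks &&
    (impressionsByUrl.get(row.url) || 0) === 0);
}
```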

Google Search Console — Index Layer

Canonical override detection (I1, I5): In URL Inspection, the “Google-selected canonical” field shows which URL Google has chosen as the preferred version. The “User-declared canonical” field shows what your implementation declares. When these differ, Google has overridden your preference — the reason is almost always a signal mismatch, a canonical chain, or a header-level conflict. Document every URL where these fields diverge as a priority investigation target.
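The same two fields are exposed programmatically by the Search Console URL Inspection API as `googleCanonical` and `userCanonical` inside `inspectionResult.indexStatusResult`. This helps once the list of divergent URLs outgrows manual inspection. The sketch assumes Node 18+ and an OAuth 2.0 access token obtained separately. `inspectUrl` and `canonicalOverride` are hypothetical helper names. The API enforces per-property quotas, so sample priority URLs rather than the whole site.

```javascript
// Call the Search Console URL Inspection API for one URL.
// Assumes `accessToken` is an OAuth 2.0 token authorised for Search Console
// and `siteUrl` is a property you have access to.
async function inspectUrl(accessToken, siteUrl, inspectionUrl) {
  const res = await fetch('https://searchconsole.googleapis.com/v1/urlInspection/index:inspect', {
    method: 'POST',
    headers: { Authorization: `Bearer ${accessToken}`, 'Content-Type': 'application/json' },
    body: JSON.stringify({ inspectionUrl, siteUrl }),
  });
  if (!res.ok) throw new Error(`URL Inspection API returned ${res.status}`);
  return res.json();
}

// Compare the canonical Google selected with the one the page declares.
function canonicalOverride(response) {
  const status = response.inspectionResult?.indexStatusResult ?? {};
  const { googleCanonical = null, userCanonical = null } = status;
  return {
    googleCanonical,
    userCanonical,
    overridden: Boolean(googleCanonical && userCanonical && googleCanonical !== userCanonical),
  };
}
```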

noindex monitoring (I2): Page indexing report (formerly Coverage) → “Excluded by ‘noindex’ tag.” Track this count weekly using a GSC data export or a connected reporting tool. Establish a baseline for your site’s expected noindex count. Any increase above that baseline that correlates temporally with a deployment should be treated as a template-level deployment error until the root cause is confirmed. Do not begin content quality investigation until the directive source has been ruled out.
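The baseline-plus-deployment rule can be applied to a weekly export automatically. This sketch assumes weekly rows shaped `{ weekEnding: 'YYYY-MM-DD', count }` and a list of deployment dates in the same format; both shapes are hypothetical. The 10% tolerance above baseline is an illustrative default, not a recommended value.

```javascript
const DAY_MS = 24 * 60 * 60 * 1000;

// Flag weeks whose noindex count exceeds the baseline by more than `tolerance`,
// and attach any deployment in the seven days up to and including weekEnding.
function noindexSpikes(weeks, baseline, deploys, tolerance = 0.1) {
  const deployTimes = deploys.map((d) => Date.parse(d));
  return weeks
    .filter((w) => w.count > baseline * (1 + tolerance))
    .map((w) => {
      const end = Date.parse(w.weekEnding);
      const nearbyDeploys = deploys.filter((_, i) => deployTimes[i] > end - 7 * DAY_MS && deployTimes[i] <= end);
      return { ...w, nearbyDeploys, likelyTemplateEvent: nearbyDeploys.length > 0 };
    });
}
```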

Duplicate and parameter indexation (I4): In the Page indexing report, “Duplicate without user-selected canonical” shows pages Google has identified as duplicates for which you have not declared a canonical. “Duplicate, Google chose different canonical than user” shows cases where your canonical declaration was overridden. High counts for either reason require a parameter audit. For the second reason specifically, identify which URL Google chose as canonical. If it’s a parameter variant rather than the clean URL, external links often point to the parameter version, in which case canonicalisation alone won’t resolve the equity split.

hreflang verification (I3): GSC no longer provides hreflang reporting. The International Targeting report under Legacy Tools and Reports, which showed hreflang usage data and some errors, was deprecated in 2022. For hreflang validation, supplement GSC with third-party tooling such as the Ahrefs workflow below.

Ahrefs Site Audit — Index Layer

hreflang error detection (I3): Site Audit → Localisation → hreflang. Run this report after every deployment that touches international page templates. The report categorises errors by type: “Missing return tag” is the highest-priority category — address these before anything else. “Non-canonical return URLs” identifies cases where the hreflang annotation references a URL that is not the canonical version of the page, which undermines the entire signal. “Incorrect language/region codes” lists malformed locale values that Google ignores silently. Export all error types and resolve them by template class rather than individually.

Thin page detection at scale (I6): Site Audit → Page Explorer → apply filters for URL pattern and content length. Set a word count filter of less than 200 or less than 300 depending on your site’s content baseline. Sort results by organic traffic (via GSC integration) ascending. The pages at the bottom — thin content and zero traffic — are the removal candidates. Before applying noindex at scale, confirm the affected pages are not receiving inbound links with ranking-relevant anchor text from external sources — thin pages carrying significant external equity may be worth developing rather than removing from the index.

Index Layer Recovery Timeline

Index-layer fixes require Googlebot to recrawl and re-evaluate directive logic before any recovery appears in GSC data. Unlike Crawl-layer fixes where recrawl is often sufficient, Index-layer corrections sometimes require multiple recrawl cycles before Google resolves its internal canonical selection or deindexes previously indexed URLs. Factor this into your monitoring schedule — checking for recovery after 48 hours on a canonical fix is premature by weeks.

| Mistake | Expected Recrawl Window | Expected Index Reflection | Verification Signal | Escalation Trigger |
|---|---|---|---|---|
| I1: Canonical chains resolved | 3–14 days | 3–8 weeks for equity consolidation to affect rankings | GSC URL Inspection: Google-selected canonical now matches user-declared canonical | Canonical override persisting after 6 weeks with confirmed fix in place |
| I2: Template-level noindex corrected | 24–72 hours after directive removed | 7–21 days for pages to re-enter index | Page indexing “Excluded by ‘noindex’ tag” count returning to pre-incident baseline | Count not declining after 14 days; check for a secondary header-level directive still active |
| I3: hreflang return tags and locale codes fixed | 3–14 days | 2–6 weeks for correct regional versions to stabilise in SERPs | Ahrefs hreflang error count declining; GSC Performance report, filtered by country, showing the intended locale URLs receiving impressions | Wrong locale still appearing in target region SERPs after 6 weeks |
| I4: Parameter variants canonicalised | 2–4 weeks for Google to re-evaluate parameter URL cluster | 4–10 weeks for indexation debt reduction to be visible in the Page indexing report | GSC “Duplicate without user-selected canonical” count declining; clean URL confirmed as Google-selected canonical | Parameter variants still indexed after 10 weeks with confirmed canonical implementation |
| I5: HTTP header canonical conflict resolved | 24–72 hours after CDN cache flush and header fix confirmed | 1–3 weeks for Google to re-evaluate preferred URL | curl -I on affected URLs: Link header canonical now matches on-page canonical; GSC URL Inspection alignment confirmed | Conflict persisting in curl header output after confirmed infrastructure fix; re-check CDN cache rules |
| I6: Thin programmatic pages noindexed | 24–72 hours per URL after noindex directive applied | 7–28 days for pages to be removed from index | Indexed page count for affected URL pattern declining week over week | Pages still indexed after 30 days; verify the noindex is actually served by checking both the HTML and the HTTP response headers |

CRISD Layer 4 — Signal: Technical SEO Implementation Mistakes That Dilute or Misdirect Ranking Signals

The Index layer determines whether a page enters Google’s index and which URL is preferred. The Signal layer determines how much ranking potential that indexed page actually realises. These are separate problems with separate diagnostics. A page can be correctly indexed — right URL, no directive conflicts, clean canonical — and still systematically underperform because the signals pointing to it are diluted, split across multiple URLs, or actively contradicted by conflicting implementation layers.

Signal-layer failures are the most frequently misdiagnosed category in technical SEO. When a well-indexed page with solid content fails to rank where it should, the default explanation is content quality. Sometimes that’s correct. Often, though, the actual cause is an architecture problem: redirect chains leaking equity through avoidable hops, internal link patterns routing authority away from commercial pages, canonical consolidation applied too aggressively and stranding pages that should rank individually, or conflicting structured data blocks that leave Google without a consistent schema output from which to generate rich results.

None of these failures produce an alert. None show up in Coverage. They operate silently below the ranking surface, accumulating over months as migrations leave chains un-collapsed, content production adds internal links without architectural intent, and plugin updates quietly introduce schema conflicts that suppress rich result eligibility, often without surfacing a validation error. Diagnosing this layer correctly requires deliberately cross-referencing signal sources (equity flow, directive consistency, structured data output, and field performance data) rather than treating any one of them in isolation.

Mistake S1 — Structured Data Conflicts Across Multiple Implementations (P2)

What goes wrong: A page carries two or more structured data blocks declaring conflicting values for the same property. This happens most often on CMS platforms where multiple plugins independently generate JSON-LD: an SEO plugin outputs Article with one dateModified value, a caching plugin outputs a second Article block with a different date, and a review aggregator adds an AggregateRating that references a name value inconsistent with the first block’s headline. The result is a schema output that Google’s structured data parser must adjudicate rather than directly process. Rich result eligibility degrades or disappears entirely depending on which conflicting property Google attempts to use.

Why it happens: Each plugin was installed independently to solve a specific problem, and none of them were designed with awareness of what the others output. The SEO plugin handles primary entity markup. The WooCommerce integration adds product schema. A breadcrumb plugin adds its own BreadcrumbList that duplicates — and disagrees with — the breadcrumb output in the SEO plugin. Nobody audits the combined schema output on the live page because the individual plugin settings each look correct in isolation.

How to detect it: Paste target page URLs directly into Google’s Rich Results Test. When multiple schema blocks of the same type appear in the output, expand both and compare property values — specifically name, url, dateModified, datePublished, and author on Article types; price and availability on Product types. Any property that carries different values across two blocks of the same type is a conflict. In GSC, Enhancements reports surface validation errors and warnings at scale — a high error-to-implementation ratio on any schema type indicates systemic conflict rather than isolated misconfiguration.
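Once a page carries more than a handful of blocks, the same-type comparison described above is easy to script. The following Python sketch uses only the standard library. It extracts every application/ld+json block from saved page HTML, walks @graph arrays, and reports any watched property that takes different values across entities of the same @type.

The sketch makes two assumptions. First, each page describes one primary entity per type, so listing pages with many Products will produce false positives. Second, it only compares same-type blocks, so cross-type mismatches (such as an AggregateRating name against an Article headline) still need a manual check. The watched property list mirrors the properties named above, with price and availability read from the nested offers object.

```python
import json
from collections import defaultdict
from html.parser import HTMLParser

# Properties compared per @type; dotted paths reach into nested objects.
WATCHED = {
    "Article": ["name", "url", "datePublished", "dateModified", "author"],
    "Product": ["offers.price", "offers.availability"],
}


class JsonLdExtractor(HTMLParser):
    """Collects the raw text of every <script type="application/ld+json"> block."""

    def __init__(self):
        super().__init__()
        self.blocks, self._buf, self._inside = [], [], False

    def handle_starttag(self, tag, attrs):
        if tag == "script" and (dict(attrs).get("type") or "").lower() == "application/ld+json":
            self._inside, self._buf = True, []

    def handle_data(self, data):
        if self._inside:
            self._buf.append(data)

    def handle_endtag(self, tag):
        if tag == "script" and self._inside:
            self.blocks.append("".join(self._buf))
            self._inside = False


def iter_entities(data):
    """Yield every node carrying an @type, including nodes nested in lists or @graph."""
    if isinstance(data, list):
        for item in data:
            yield from iter_entities(item)
    elif isinstance(data, dict):
        if "@type" in data:
            yield data
        if "@graph" in data:
            yield from iter_entities(data["@graph"])


def get_path(node, path):
    """Follow a dotted path such as 'offers.price'.

    Returns None if a step is missing or is not an object, so multi-offer lists are skipped.
    """
    for key in path.split("."):
        if not isinstance(node, dict) or key not in node:
            return None
        node = node[key]
    return node


def find_conflicts(html):
    """Map (type, property) -> sorted distinct values, for properties that disagree."""
    extractor = JsonLdExtractor()
    extractor.feed(html)
    values = defaultdict(set)
    for raw in extractor.blocks:
        try:
            data = json.loads(raw)
        except json.JSONDecodeError:
            continue  # invalid JSON is a separate error; the Rich Results Test flags it
        for node in iter_entities(data):
            types = node["@type"] if isinstance(node["@type"], list) else [node["@type"]]
            for schema_type in types:
                for path in WATCHED.get(schema_type, []):
                    value = get_path(node, path)
                    if value is not None:
                        # Serialise so nested values (e.g. author objects) are comparable.
                        values[(schema_type, path)].add(json.dumps(value, sort_keys=True))
    return {key: sorted(found) for key, found in values.items() if len(found) > 1}
```

Running `find_conflicts` over the saved HTML of one representative URL per template type gives a quick first pass. The Rich Results Test remains the authority on eligibility.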

How to fix it: Consolidate all schema output through a single implementation layer. Disable structured data output in every plugin except one designated schema handler, then audit the surviving output against the full property requirements for each schema type used on the site. Where a plugin cannot be disabled without losing other functionality, use its settings to suppress only the schema output while retaining other features. After consolidation, validate every affected template type through the Rich Results Test and confirm that GSC Enhancements error counts decline over the following two to three weeks.

Mistake S2 — Internal Link Equity Misallocation (P2)

What goes wrong: Internal links distribute authority through a site. When the majority of internal link equity flows to informational pages — blog posts, glossary entries, resource hubs — rather than to commercial pages carrying revenue impact, the ranking potential of transactional content is structurally constrained. The informational pages rank easily. The commercial pages that the business depends on are authority-starved despite sitting on the same domain. This is not a content gap problem. It is an architectural equity allocation problem that content improvements alone cannot resolve.

Why it happens: Editorial linking patterns develop organically over years without commercial intent architecture. Writers naturally link to informational content — definitions, related articles, resource pages — because it provides context for readers. Nobody audits the cumulative equity distribution effect of thousands of such linking decisions. Over time, the internal link graph becomes deeply biased toward informational content, and commercial pages sit at the periphery of the architecture receiving minimal equity from the rest of the site.

How to detect it: In Ahrefs Site Audit, run the Internal Link report and filter by page type using URL pattern matching. Compare average incoming internal link counts and estimated URL Rating between informational and commercial page types across the same domain. On content-heavy sites, a ratio exceeding 3:1 in favour of informational pages — in terms of both link count and equity — indicates structural misalignment. Cross-reference with GSC to confirm that commercial pages with strong topical relevance and solid external backlink profiles are nonetheless underranking relative to their authority signals. If they are, equity misallocation is the primary suspect.
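If you export the internal link graph (for example as source/target pairs from a crawler), you can reproduce the link-count half of this comparison yourself. The Python sketch below does three things:

- classifies pages with URL patterns;
- counts unique linking pages per target;
- returns the average inbound count for each page type plus the informational-to-commercial ratio.

The patterns are hypothetical placeholders. The sketch measures link counts only; it does not estimate equity or URL Rating.

```python
import re
from collections import Counter

# Hypothetical URL patterns; replace them with your site's real structure.
PAGE_TYPES = {
    "informational": re.compile(r"^/(blog|glossary|resources)/"),
    "commercial": re.compile(r"^/(products|pricing|services)/"),
}


def classify(path):
    """Return the first page type whose pattern matches the path, else None."""
    for page_type, pattern in PAGE_TYPES.items():
        if pattern.search(path):
            return page_type
    return None


def inlink_ratio(edges, pages):
    """Average unique inbound internal links per page type, and their ratio.

    edges: iterable of (source_path, target_path) internal links.
    pages: every crawled path, so pages with zero inbound links still count.
    """
    # Deduplicate so repeated links from one page count once; ignore self-links.
    inbound = Counter(target for source, target in set(edges) if source != target)
    totals, counts = Counter(), Counter()
    for path in pages:
        page_type = classify(path)
        if page_type:
            totals[page_type] += inbound[path]
            counts[page_type] += 1
    info = totals["informational"] / counts["informational"] if counts["informational"] else 0.0
    comm = totals["commercial"] / counts["commercial"] if counts["commercial"] else 0.0
    ratio = info / comm if comm else float("inf")
    return info, comm, ratio
```

A ratio above 3.0 corresponds to the 3:1 threshold mentioned above. Treat it as a prompt for the GSC cross-reference rather than a diagnosis on its own.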

How to fix it: Implement a deliberate internal link architecture review. Identify the 20 to 30 commercial pages carrying the highest revenue importance and audit their current inbound internal link count. For each, identify existing high-traffic informational pages with topical relevance and add contextual internal links from those pages to the commercial targets. Establish an editorial linking policy that requires consideration of commercial page link targets in all new content production. The effect accumulates slowly (expect four to twelve weeks before ranking movement), but it compounds over time as new content continues to reinforce the corrected architecture.

Mistake S3 — Redirect Chains and Multi-Hop Equity Leakage (P2)

What goes wrong: A single 301 redirect passes the large majority of link equity from source to destination. Each additional hop in a redirect chain introduces further equity degradation. A two-hop chain (/old-url → /interim-url → /final-url) leaks equity at each of its two redirects. Every external backlink and internal link pointing to /old-url therefore delivers only a fraction of the value to /final-url that a direct link would. On sites where key pages have accumulated significant external link equity through sequential migrations over several years, the cumulative leakage across multiple chains can meaningfully suppress ranking potential.

Why it happens: Redirect chains accumulate when migrations are implemented incrementally. The first migration redirects /old to /v2. Two years later, a second migration redirects /v2 to /current. Nobody updates the first redirect to point directly to /current because by that point, nobody has a complete inventory of what the first migration covered. Internal links pointing to /old and /v2 are similarly never updated. The chains extend silently across each migration cycle.

How to detect it: In Screaming Frog, go to Reports → Redirect Chains after a full crawl. This report lists every multi-hop redirect sequence discovered during the crawl, with the full chain path and hop count per sequence. Export the list and sort by the backlink profile of the originating URL — chains where the first URL carries significant external link equity are highest priority for resolution. Supplement with an Ahrefs Backlinks report filtered by “Redirected” link type to identify which external backlinks are currently landing on redirect-chain sources rather than on the final destination URL.
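The prioritisation step is simple to script once you have the redirect map and a per-URL backlink count exported from your crawler and backlink tool. The Python sketch below takes two inputs:

- `redirects`, which maps each redirecting URL to its immediate target;
- `referring_domains`, a hypothetical export keyed by URL.

It follows each chain to its end, raises on loops, and sorts multi-hop chains so the most-linked starting URL comes first.

```python
def follow_chain(redirects, start):
    """Return the full path [start, ..., final] by following redirects; raise on loops."""
    path = [start]
    while path[-1] in redirects:
        nxt = redirects[path[-1]]
        if nxt in path:
            raise ValueError("redirect loop: " + " -> ".join(path + [nxt]))
        path.append(nxt)
    return path


def prioritised_chains(redirects, referring_domains):
    """Chains with two or more hops, ordered by the starting URL's referring domains."""
    chains = [follow_chain(redirects, source) for source in redirects]
    multi_hop = [chain for chain in chains if len(chain) - 1 >= 2]
    return sorted(multi_hop, key=lambda chain: referring_domains.get(chain[0], 0), reverse=True)


if __name__ == "__main__":
    redirects = {"/old-url": "/interim-url", "/interim-url": "/final-url", "/promo": "/sale"}
    for chain in prioritised_chains(redirects, {"/old-url": 40}):
        print(len(chain) - 1, "hops:", " -> ".join(chain))
```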

How to fix it: Update each redirect in a chain so it points directly to the final destination URL. For a two-hop chain such as /old-url → /interim-url → /final-url, this means updating the /old-url redirect to point to /final-url directly, bypassing /interim-url entirely. At the same time, update all internal links pointing to /old-url and /interim-url to reference /final-url directly. Relying on the redirect consumes crawl budget unnecessarily and leaves the equity recovery incomplete. After updates, re-crawl with Screaming Frog to confirm zero multi-hop chains remain on priority URL sets.
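Collapsing chains is the same operation applied to every entry in the redirect map. The Python sketch below assumes the map and the internal link list have been exported as plain data; the function names are illustrative. It rewrites every redirect to target its final destination and repoints internal links that currently land on a redirecting URL.

```python
def flatten_redirects(redirects):
    """Return a new map in which every source points directly at its final destination."""
    flat = {}
    for source, target in redirects.items():
        visited = {source}
        while target in redirects:
            if target in visited:
                raise ValueError(f"redirect loop starting at {source}")
            visited.add(target)
            target = redirects[target]
        flat[source] = target
    return flat


def repoint_links(links, flat):
    """Replace any internal link target that redirects with its final destination."""
    return [(page, flat.get(target, target)) for page, target in links]


if __name__ == "__main__":
    redirects = {"/old-url": "/interim-url", "/interim-url": "/final-url"}
    flat = flatten_redirects(redirects)
    print(flat)
    print(repoint_links([("/blog/a", "/old-url"), ("/blog/b", "/pricing")], flat))
```

The flattened map then has to be loaded back into whatever actually serves the redirects (server configuration, CDN rules, or a CMS plugin) before the confirming re-crawl.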

Mistake S4 — Over-Consolidated Canonicals Stranding Ranking Potential (P1)

What goes wrong: Canonical consolidation is designed to concentrate equity from duplicate or near-duplicate variants onto a single preferred URL. When the consolidation scope is drawn too broadly, canonicalising distinct pages with independent ranking potential to a single target, the result is not equity consolidation but equity erasure. Backlinks pointing to the incorrectly canonicalised pages are credited to the canonical target, but the pages themselves lose their individual ranking potential entirely. Content that could rank for unique queries stays suppressed for as long as the canonical points away from it.

Why it happens: Template-level canonical logic applied at scale introduces this failure when the URL matching pattern is broader than intended. A canonical rule designed to consolidate session-ID parameter variants like /product?session=abc to /product may inadvertently apply to /product-review and /product-comparison if the pattern match is not sufficiently specific. The canonical tag on every matched URL then points to /product, suppressing pages with distinct content and independent ranking potential across the entire matched set.
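The difference between an over-broad rule and a scoped one is easy to see in code. This Python sketch uses the example paths from the paragraph above. Both rule functions are hypothetical stand-ins for whatever template logic or plugin setting actually generates the canonical. The broad rule matches on a path prefix, while the scoped rule requires the exact /product path and a query string containing only the session parameter.

```python
import re
from urllib.parse import parse_qs, urlsplit

PREFIX = re.compile(r"^/product")  # also matches /product-review, /product-comparison, ...


def broad_rule(url):
    """Over-broad: any path starting with /product is canonicalised to /product."""
    return "/product" if PREFIX.match(urlsplit(url).path) else None


def scoped_rule(url):
    """Scoped: only /product URLs whose query string carries nothing but a session ID."""
    parts = urlsplit(url)
    if parts.path == "/product" and set(parse_qs(parts.query)) == {"session"}:
        return "/product"
    return None  # None means: keep the page's self-referencing canonical


if __name__ == "__main__":
    for url in ["/product?session=abc", "/product-review", "/product-comparison"]:
        print(url, broad_rule(url), scoped_rule(url))
```

The scoped rule leaves /product?session=abc&color=red on its own canonical. Whether that is right depends on whether the extra parameter creates distinct content, which is a decision the rule should make explicitly rather than by accident.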

How to detect it: In Screaming Frog, open the Canonicals tab and apply the “Canonicalised” filter, which lists pages whose canonical points to a different URL. Review the full list against your intended canonical scope. Any page in this list that was not explicitly intended to canonicalise away from itself represents a potential over-consolidation incident. Cross-reference with GSC impressions for the affected URLs: pages with zero impressions over six months that canonicalise to another URL warrant investigation into whether that suppression is intentional. If a page had impressions before the canonical was implemented and has had none since, the canonical is the most likely cause.
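The cross-reference can be scripted against a crawl export joined with GSC performance data. The Python sketch below relies on two placeholders:

- `CrawlRow`, a hypothetical record combining a URL, its declared canonical, and impressions for a window before and after the canonical change;
- `INTENDED_SCOPE`, a pattern standing in for the URLs you meant to consolidate.

Anything canonicalised away from itself outside that scope is flagged. Pages whose impressions fell from some to none are marked as the stronger lead.

```python
import re
from dataclasses import dataclass

# Placeholder for the URLs you meant to consolidate (here: /product session variants).
INTENDED_SCOPE = re.compile(r"^/product\?(?:.*&)?session=")


@dataclass
class CrawlRow:
    url: str
    canonical: str
    impressions_before: int  # GSC impressions in a window before the canonical change
    impressions_after: int   # GSC impressions in a comparable window since


def over_consolidated(rows, intended=INTENDED_SCOPE):
    """Yield (url, verdict) for pages canonicalised away from themselves outside scope."""
    for row in rows:
        if row.canonical == row.url or intended.search(row.url):
            continue  # self-referencing, or consolidated on purpose
        if row.impressions_before > 0 and row.impressions_after == 0:
            yield row.url, "impressions lost: canonical is the likely cause"
        else:
            yield row.url, "investigate"
```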

How to fix it: Correct the template-level canonical logic to restrict its scope to the intended URL pattern. Any page incorrectly canonicalised away from itself needs a self-referencing canonical restored. After correcting the implementation, submit affected URLs through GSC URL Inspection to accelerate re-evaluation. Recovery of individual ranking signals after an over-consolidation fix is slow — Google must recrawl the page, re-evaluate it as an independent URL, and rebuild its signal attribution from scratch. Expect six to twelve weeks before meaningful ranking recovery on affected pages.

Mistake S5 — Meta Robots vs X-Robots-Tag Directive Conflicts (P1)

What goes wrong: A page carries contradictory robots directives across two layers: the on-page <meta name="robots"> tag permits indexation and following, while an X-Robots-Tag HTTP response header restricts one or both. Google resolves the conflict by applying the more restrictive directive. The practical consequence is that a page can appear fully permissive in its HTML source — no visible noindex, no nofollow — while an infrastructure-layer header is actively suppressing its indexation or preventing equity from passing through its outbound links. The failure is invisible to any audit that examines only page source.

Why it happens: The same infrastructure change vectors that cause header-level canonical conflicts — CDN rule updates, reverse proxy configurations, server-side middleware — can inject X-Robots-Tag headers independently of CMS-level robots settings. A security or performance layer added to handle specific URL patterns may apply response headers more broadly than intended. It’s also common to find X-Robots-Tag: noindex headers persisting on URLs that were previously restricted during development, where the staging configuration was partially cleaned up but the header injection rule remained active at the server or CDN level.

How to detect it: Run curl -I [URL] on representative pages across every major template type. Look for X-Robots-Tag in the response headers. Any value in this header — noindex, nofollow, none, noarchive — that conflicts with the intended directive for that page type requires immediate investigation. In Screaming Frog, configure Response Header extraction for X-Robots-Tag under Configuration → Spider → Response Headers before crawling. Post-crawl, export the Response Headers report and filter for any URL carrying a restrictive X-Robots-Tag value alongside an indexable on-page meta robots tag — these represent active conflicts.
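The header-versus-meta comparison can also be scripted for pages you have already fetched. The Python sketch below assumes you pass in every X-Robots-Tag header value for a URL plus its HTML. It reports restrictive header directives (noindex or nofollow, with none expanded to both) that the on-page meta robots tag does not also declare. It handles the crawler-scoped header form (googlebot: noindex) and ignores directives scoped to other crawlers. Other values such as noarchive are left out for brevity, and the function names are illustrative.

```python
from html.parser import HTMLParser

RESTRICTIVE = {"noindex", "nofollow"}
# Directives whose own syntax contains a colon, so "name:" is not a crawler prefix.
VALUE_DIRECTIVES = {"max-snippet", "max-image-preview", "max-video-preview", "unavailable_after"}


def parse_directives(value):
    """Split a comma-separated directive list into a lowercase set."""
    return {part.strip().lower() for part in value.split(",") if part.strip()}


def restrictive(directives):
    """Keep only noindex/nofollow, expanding 'none' into both."""
    expanded = set(directives)
    if "none" in expanded:
        expanded |= RESTRICTIVE
    return expanded & RESTRICTIVE


def header_directives(values, crawler="googlebot"):
    """Directives from X-Robots-Tag values that apply to the given crawler."""
    found = set()
    for value in values:
        prefix, sep, rest = value.partition(":")
        prefix = prefix.strip().lower()
        if sep and "," not in prefix and prefix not in VALUE_DIRECTIVES:
            if prefix == crawler:  # crawler-scoped form, e.g. "googlebot: noindex"
                found |= parse_directives(rest)
        else:
            found |= parse_directives(value)  # generic form, e.g. "noindex, nofollow"
    return found


class MetaRobotsParser(HTMLParser):
    """Collects directives from <meta name="robots"> and <meta name="googlebot">."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "meta" and (attrs.get("name") or "").lower() in ("robots", "googlebot"):
            self.directives |= parse_directives(attrs.get("content") or "")


def robots_conflict(header_values, html):
    """Restrictive header directives that the on-page meta robots tag does not declare."""
    parser = MetaRobotsParser()
    parser.feed(html)
    return restrictive(header_directives(header_values)) - restrictive(parser.directives)
```

With the standard library, `urllib.request.urlopen(url).headers.get_all("X-Robots-Tag") or []` collects every X-Robots-Tag value from a GET response. curl -I sends a HEAD request instead, so if the two disagree, check whether the CDN or server handles HEAD and GET differently.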

How to fix it: Remove or correct the header-level directive at its source — the CDN configuration, middleware rule, or server header injection policy generating the conflict. Fixing the on-page meta tag while the header override remains active achieves nothing. After the infrastructure fix, flush the CDN cache, re-run curl -I to confirm header removal, and submit affected URLs for recrawl. Add X-Robots-Tag header inspection to post-deploy verification for any infrastructure change that touches response header configuration.

Mistake S6 — Open Graph vs JSON-LD Mismatch Impacting Rich Results (P3)